What is DevOps Anyway?

Traditionally, the worlds of software development, testing (also known as Quality Assurance) and the IT infrastructure needed to support such activities (often called Operations) were separate. The developers would write code based on requirements they were given, testers would test the features based on the same requirements (hopefully?!) and the IT staff would provide the computers, networks, and software needed by the two other groups to perform their activities. They would also be in charge of providing different environments (development, test, staging, production) that could be used by the development and testing teams.

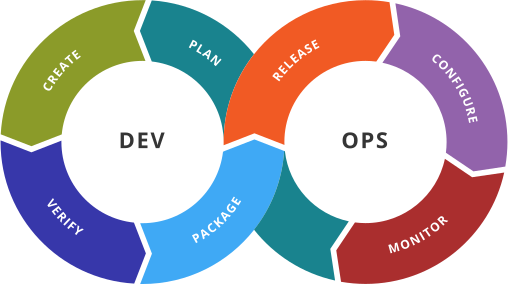

With the rise of agile methodologies such as Scrum, XP and Kanban, these three separate "stove-piped" worlds could no longer exist. The term Application Lifecycle Management (or ALM) was the unification of development and testing into a single process, and the logical next step has been the unification of all three disciplines into a single integrated process called DevOps:

(Image credit: Devops.png by Rajiv.Pant; derivative work by Wylve, derived from Devops.png, originally by Gary Stevens)

The goal of DevOps is to automate as many of the steps as possible between an idea being formed and the finished code being released into production. This shrinks the time between someone coming up with the idea of a new product or business and the new product being available in the marketplace. This means that concerns such as provisioning servers and other infrastructure as well as scaling a successful application need to be as automated and seamless as the software development build process.

What are the Elements of DevOps?

There are many different ways of categorizing the tools that support DevOps. In general, however, the following seven areas need to be considered when selecting the tools that make up what is usually known as the DevOps toolchain:

- Plan — Plan is composed of two things: "define" and "plan". This activity refers to the business value and application requirements.

- Code / Build — code design and development tools, source code management tools, continuous integration / build servers

- Test / Verify — continuous testing tools and processes that provide feedback on business risks

- Package — artifact repository, application pre-deployment staging

- Release — change management, release approvals, release automation

- Configure — infrastructure configuration and management, Infrastructure as Code tools

- Monitor — application performance monitoring, end-user experience

Now the relative importance of each of these seven items will vary depending on the type of application (web based, mobile, legacy desktop, micro-services, AI, data warehouses), the methodology being used (continuous build and integration usually requires an agile methodology), and whether the applications are in MVP, early adoption, mainstream adoption or support and maintenance mode.

How Does It Relate to Application Lifecycle Management (ALM)?

One question that we're often asked is how DevOps relates to ALM. There are many discussions about this topic, but in our minds DevOps is the logical extension of ALM to include the IT / infrastructure that is critical for software development activities to occur, but is often forgotten or taken for granted. The other difference is that DevOps is such a broad concept that you generally need a set of different tools to perform all its activities, whereas ALM is often something that a single tool or platform is capable of managing. So ALM tools and vendors are effectively key elements of the overarching DevOps toolchain.

How does Inflectra do DevOps?

In each of the sections of this whitepaper we have a dedicated section on how we perform DevOps at Inflectra. We describe some of the tools and techniques we have used over the past 12 years for DevOps. Over that time, we have grown as a company, changed environments, moved to the cloud and adopted different approaches and methodologies, and we hope that our successes and mistakes will be helpful to you in making good decisions as your teams grow and evolve.

1. Plan

In this section, we shall be looking at the Planning aspects of DevOps and how you can use a mixture of Inflectra products and other tools and plugins to improve the Plan aspect of the DevOps Toolchain.

Typically, in DevOps, the Plan element is actually composed of two things: "define" and "plan". The first part – Define – refers to the activity needed to come to a common understanding of the application requirements and the inherent business value of the application being developed. The second part – Plan – refers to taking those requirements and developing a set of activities with milestones and roles that will carry out realization of the defining requirements.

The exact nature of the plan will depend on the project management methodology being used, with agile projects using higher-level requirements (called user stories) that are subject to change, and waterfall projects using more prescribed requirements and deadlines that generally don’t change much during the project.

Definition

When it comes to defining the vision, goals and requirements for the project, there are many different approaches that can be used.

For creating the high-level vision and goals, a technique used for projects with lots of stakeholders is a workshop. In this live forum, stakeholders from the different user communities, together with the product owner, UX designers, developers, and testers, come together in a series of cross-functional meetings to define and refine the high-level objectives, constraints, restraints and measurable goals for the project. The advantage of such a forum is that everyone gains ownership of the project and that impediments are identified early.

Other options for defining a project’s vision and goals are smaller informal brainstorming sessions, which can be in-person using whiteboards and other “informal” tools, or virtual using online collaboration tools that allow shared video, audio and workspaces.

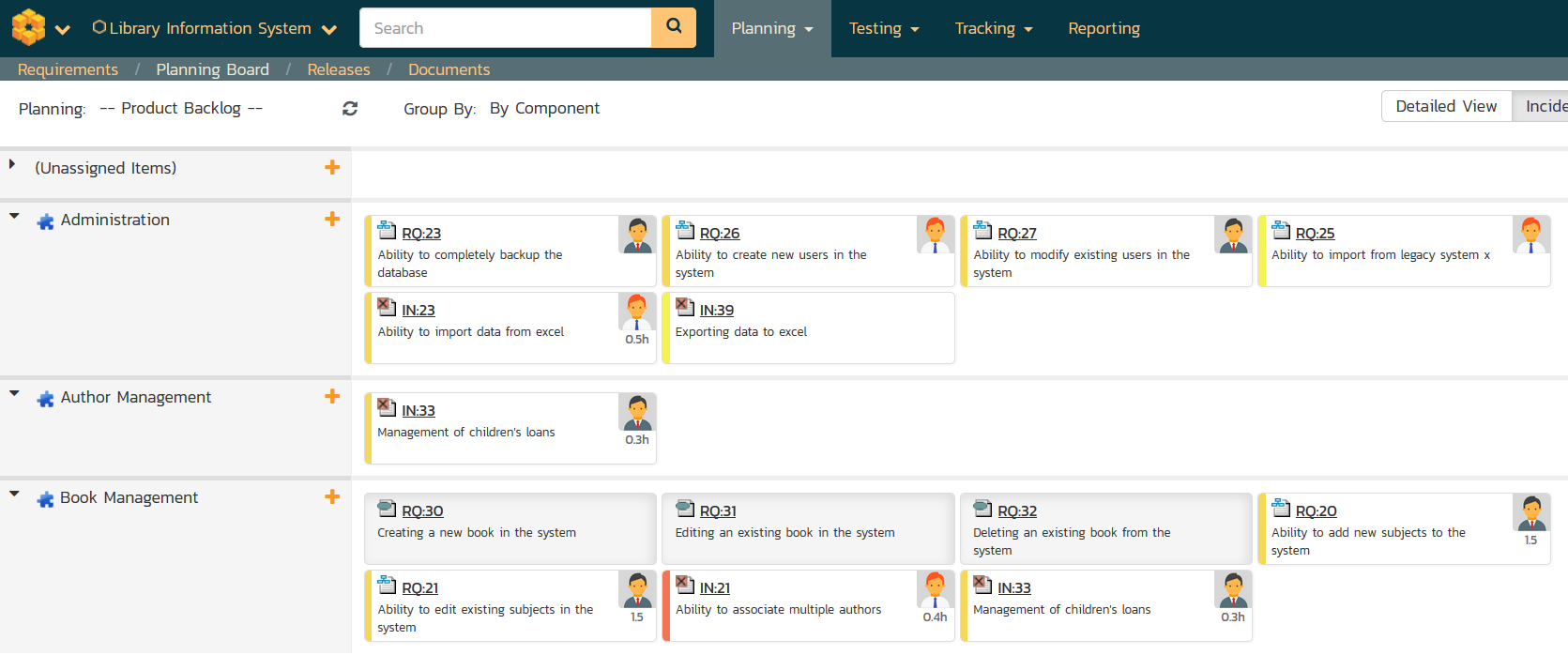

Once the high-level vision and goals have been described, the more detailed requirements for the system are defined. For agile projects, a simple list of user stories grouped by theme and epic often suffices:

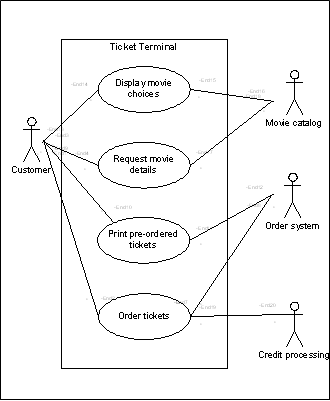

For more complex situations, you may want to use more formal methods such as UML modelling using a dedicated modelling tool, a traditional requirements management system, or a use case repository:

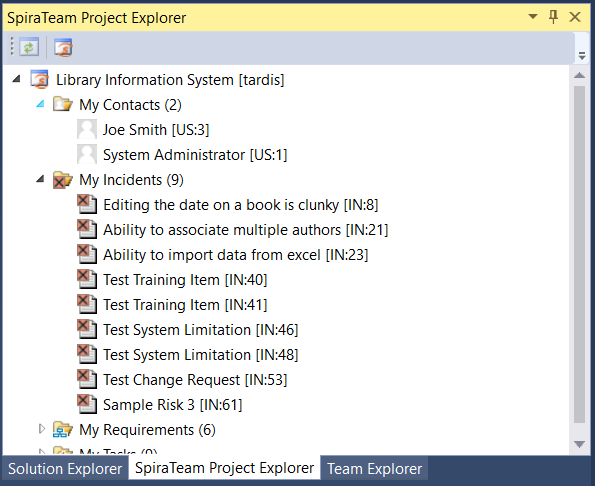

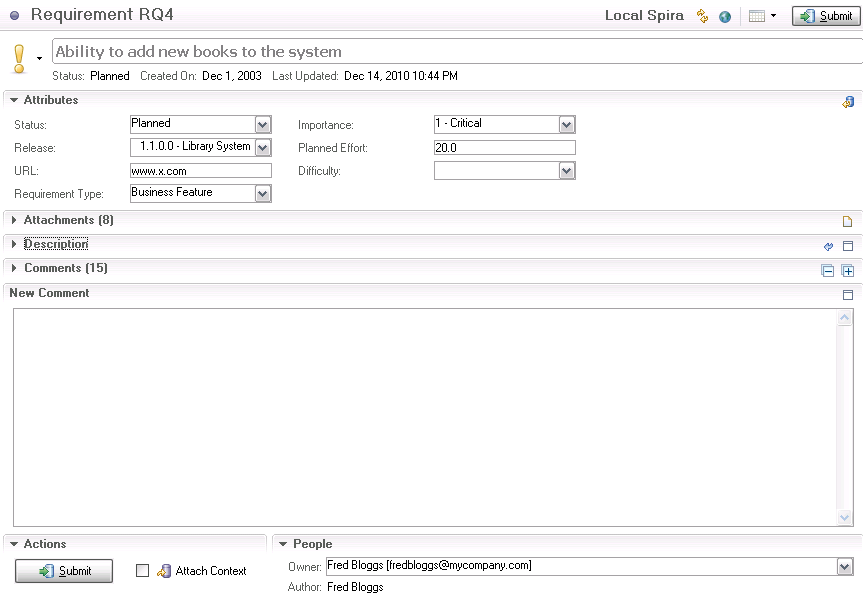

Many of our customers use SpiraTeam heavily in this part of the define process, capturing requirements, user stories, epics, use cases and non-functional requirements (such as constraints) in the system:

Integration with UML modelling tools such as Sparx Enterprise Architect as well as connectors for other requirements capture utilities (Jama, DOORS, RequisitePro, etc.) allow you to use the format that makes sense for your project.

In addition, for most applications you will need to consider the user interface, and UX designers will begin crafting UX wireframes and other mockups to help illustrate the intended functionality.

Planning

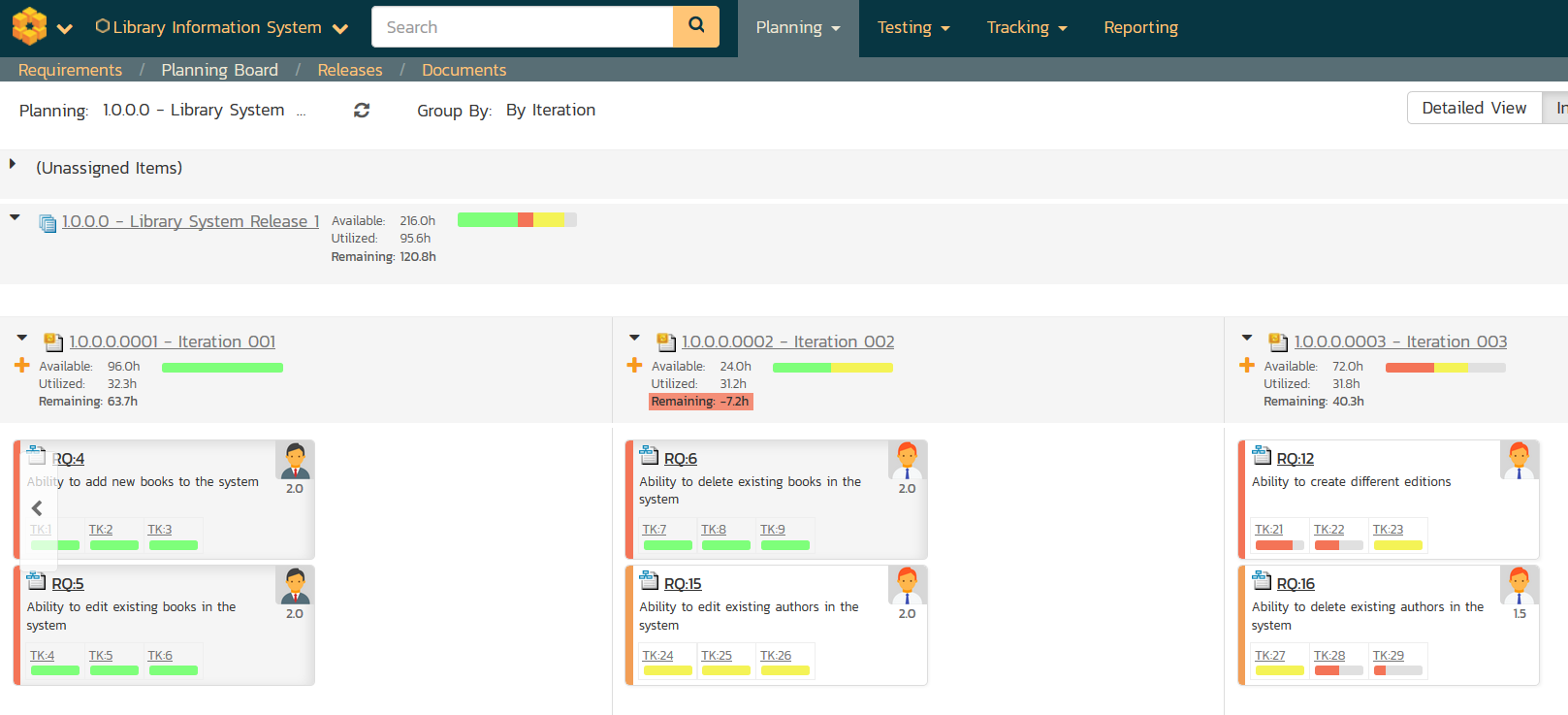

The planning side of the equation will also depend heavily on the software development lifecycle (SDLC) methodology being used. For example, a Scrum agile project will make use of user stories, backlog items, releases, sprints/iterations, tasks, and acceptance tests. It will have frequent, well-defined increments of fixed scope delivered on a pre-defined schedule. At the end of each increment (called a sprint), the scope of the subsequent sprints is redefined based on feedback (called a retrospective).

At the other end of the spectrum, a waterfall project will have a prescriptive project plan, with large releases of fixed functional scope containing a detailed requirements specification, detailed upfront design, test plan, and work breakdown structure. The plan will contain a large number of interdependent tasks that have defined dependencies, milestones and critical paths.

The tools used for planning (and ultimately managing) the project should be chosen with the adopted SDLC in mind. Some tools are highly opinionated and only work with a specific flavor of agile (Lean, Scrum, Kanban, SAFe, etc.), and others only work with waterfall projects.

SpiraPlan offers a turnkey solution to managing your projects, with support for all flavors of agile, as well as support for waterfall and hybrid (a mixture of agile and waterfall) projects.

2. Code / Build

The development aspects of DevOps are often the ones that we most associate with the DevOps revolution, since they comprise tools and techniques to make the lives of software developers easier, and less reliant on infrastructure from traditional IT organizations.

In this section we shall discuss tools that help developers design, write, compile, debug, manage, version, build, and integrate code. The activities around deployment of code will be covered in sections 4 and 5 later.

Writing & Debugging Code

The choice of tools to design, write, compile, and debug code is usually based on the technologies chosen to create the system. If you are creating a web application using HTML, React, JavaScript, Apache, Tomcat, NodeJS and Postgres you will need a different set of tools than if you are developing the same application using HTML, Angular, JavaScript, IIS, Microsoft .NET, and SQL Server.

In general, however, you will want an Integrated Development Environment (IDE) that supports the programming languages you will be using (sometimes you will need multiple), with a compiler that builds for the platform you are using, debugging tools to let you step through the code when things don’t work as expected, and design tools that make documentation, refactoring and understanding code easier.

SpiraTeam includes add-ons for the most popular IDEs, including Microsoft Visual Studio, the JetBrains IDE family, and Eclipse. This lets developers work in their IDE to see the requirements they have to build as well as the code they are writing, all in the same place.

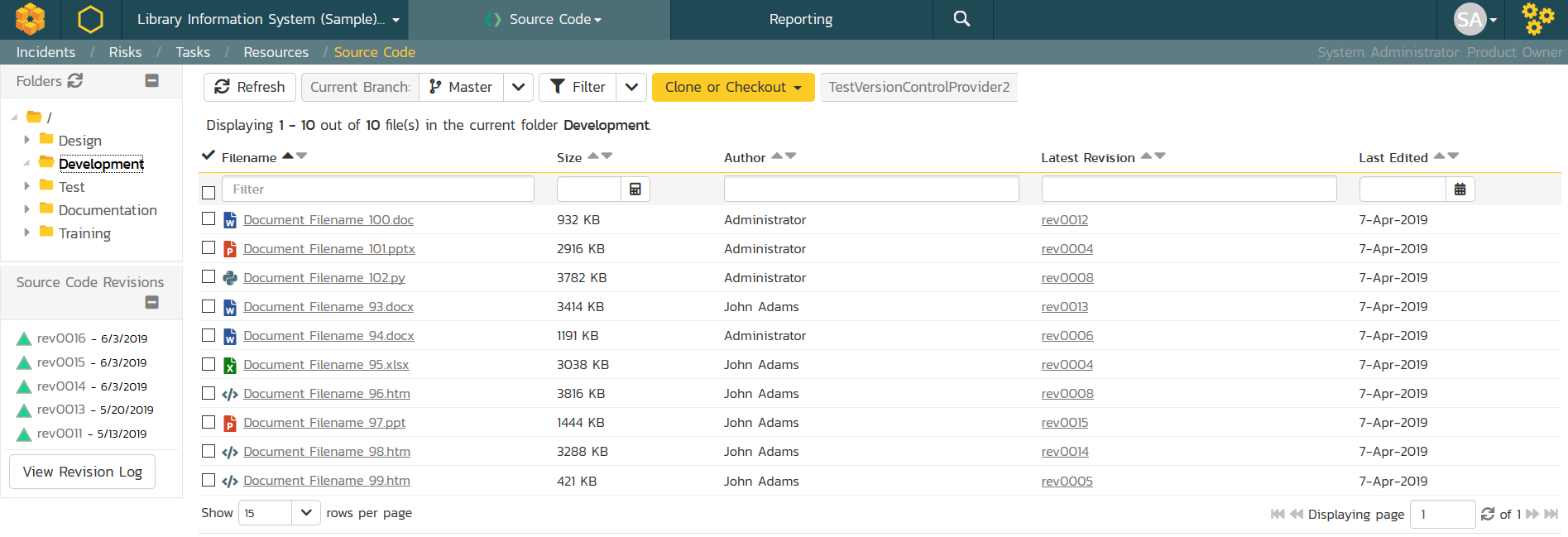

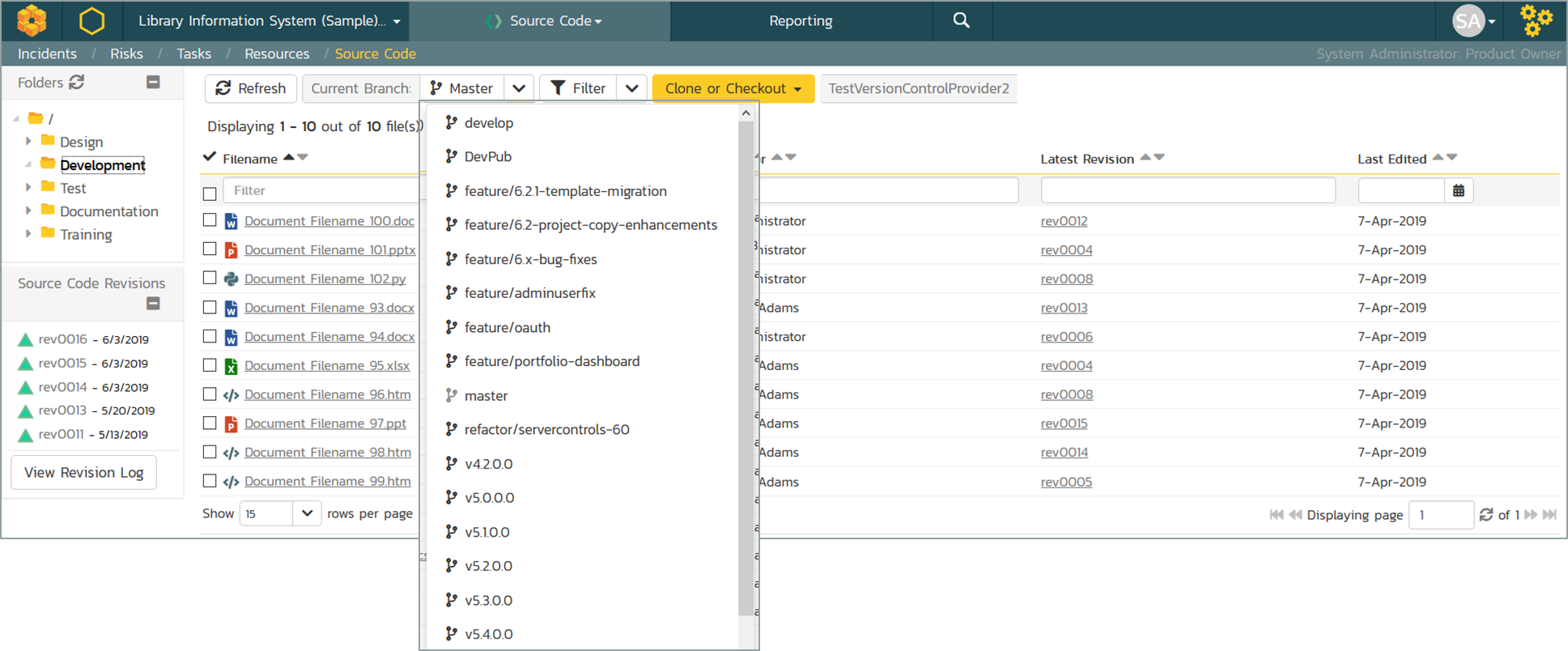

Managing & Versioning Code

The code used to create an application is the lifeblood of most software businesses and is valuable intellectual property (IP) for any organization that develops software. Consequently, you need to make sure you have a robust set of tools and work practices to store and manage your source code. TaraVault® from Inflectra provides an enterprise-grade Source Code Management (SCM) solution based on the two leading industry-standard platforms: Git and Subversion.

The choice between Subversion and Git will depend on your work needs. If you have a small team, you primarily work in a small number of code branches, and your merging needs are limited, Subversion is often simpler to deploy and manage. For larger (or geographically distributed) teams, where you need to have multiple work streams in parallel, with lots of branching and merging, Git is by far the superior solution. TaraVault® lets you choose the repository type (Git vs. Subversion) per-project, giving you the most flexibility.

In addition to SCM tools such as TaraVault, you may also have a need for code analysis, code reviewing and code documenting tools that can automate or streamline code reviews, documentation, and security/performance analysis of code prior to deployment.

Building & Integrating Code

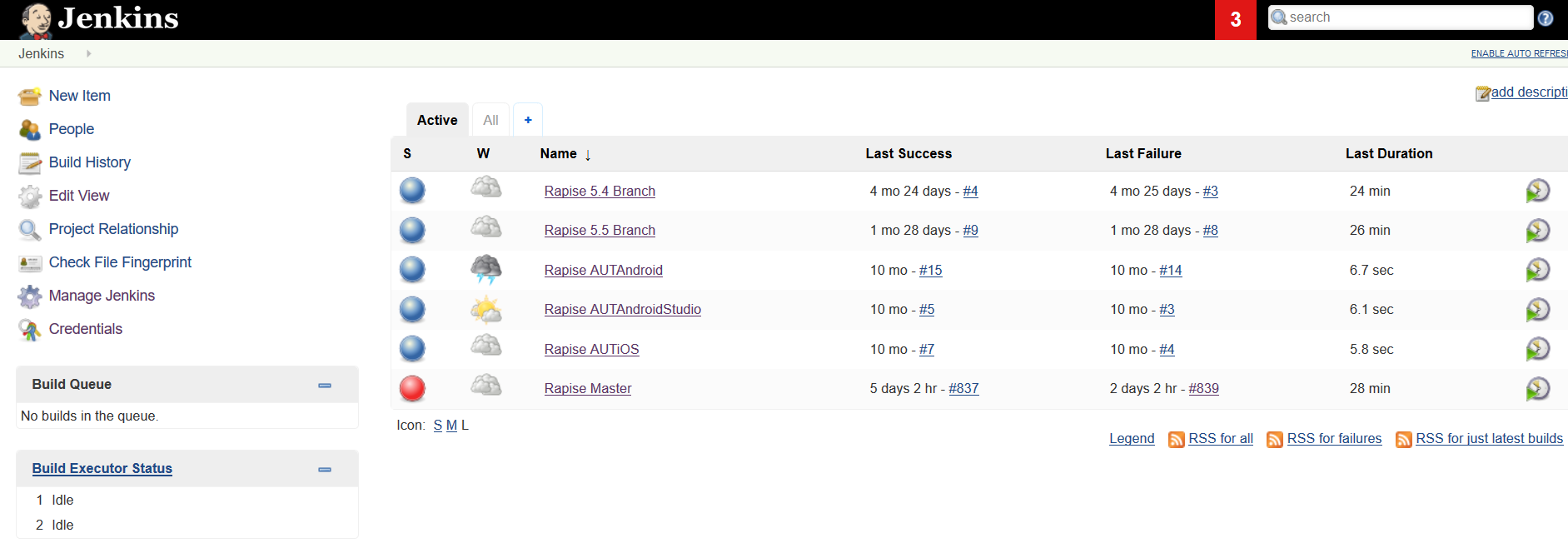

The final aspect of the development side of DevOps is the ability to quickly and easily build and integrate the software code from different developers and teams. Tools such as Jenkins, Hudson, TeamCity, Travis, and Microsoft TFS let you create different build pipelines that tie together all the tasks necessary to build the final software product.

You should make sure that your chosen Continuous Integration (CI) build tool works well with the rest of the DevOps toolchain. You should make sure that there are extensions that support your code compilers, IDEs, SCM tools, databases, and deployment processes. Ideally, you should make sure the CI tool can report back success/failure and diagnostics to your ALM suite.

In addition, many CI tools can execute jobs that orchestrate other parts of the DevOps toolchain, such as automated testing, staging, deployment, and notification.
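As a rough illustration of what a build pipeline does, the following sketch runs a list of stages in order, stops at the first failure, and records a success/failure result with diagnostics for each stage. It is not the API of Jenkins or any other CI tool; `run_pipeline`, `StageResult`, and the three stage functions are hypothetical names invented for this example.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class StageResult:
    name: str
    passed: bool
    detail: str


def run_pipeline(stages: List[Tuple[str, Callable[[], None]]]) -> List[StageResult]:
    """Run stages in order; stop at the first failure and keep its diagnostics."""
    results: List[StageResult] = []
    for name, action in stages:
        try:
            action()
        except Exception as exc:  # a failing stage signals failure by raising
            results.append(StageResult(name, False, f"{type(exc).__name__}: {exc}"))
            break  # later stages are skipped
        results.append(StageResult(name, True, "ok"))
    return results


# Hypothetical stage functions standing in for real build steps.
def compile_code() -> None:
    pass


def run_unit_tests() -> None:
    raise AssertionError("2 tests failed")


def deploy_to_staging() -> None:
    pass


results = run_pipeline([
    ("build", compile_code),
    ("test", run_unit_tests),
    ("stage", deploy_to_staging),
])
for r in results:
    print(r.name, "PASS" if r.passed else "FAIL", r.detail)
```

In this run the staging step never executes because the test stage fails first. The list of `StageResult` objects is the kind of information you would want reported back to your ALM suite.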

3. Test / Verify

The testing and verification part of the DevOps toolchain is there to mitigate technical risks and provide information that lets the management understand the quality of the software that has been developed and provide metrics and indicators that determine whether it is ready for deployment and production. Where practically possible, we recommend that you use a suite of continuous testing tools and processes that provide real-time feedback on the technical risk in the system.

This is often called Quality Engineering (QE) rather than Quality Assurance (QA) because it encompasses so much more than traditional testing.

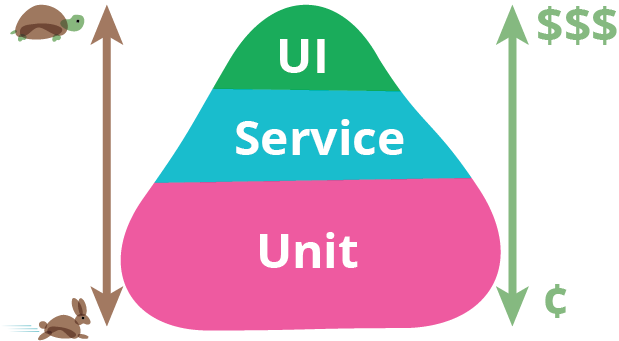

There is always a tradeoff when it comes to testing and managing quality in terms of the time spent to write tests and checks, vs. the time saved running them. A common metaphor is the “testing pyramid”:

We shall discuss each of the different types of testing. For a fuller discussion, we have several whitepapers on Testing Methodologies and Automated Testing that provide more detail.

Unit & Integration Testing

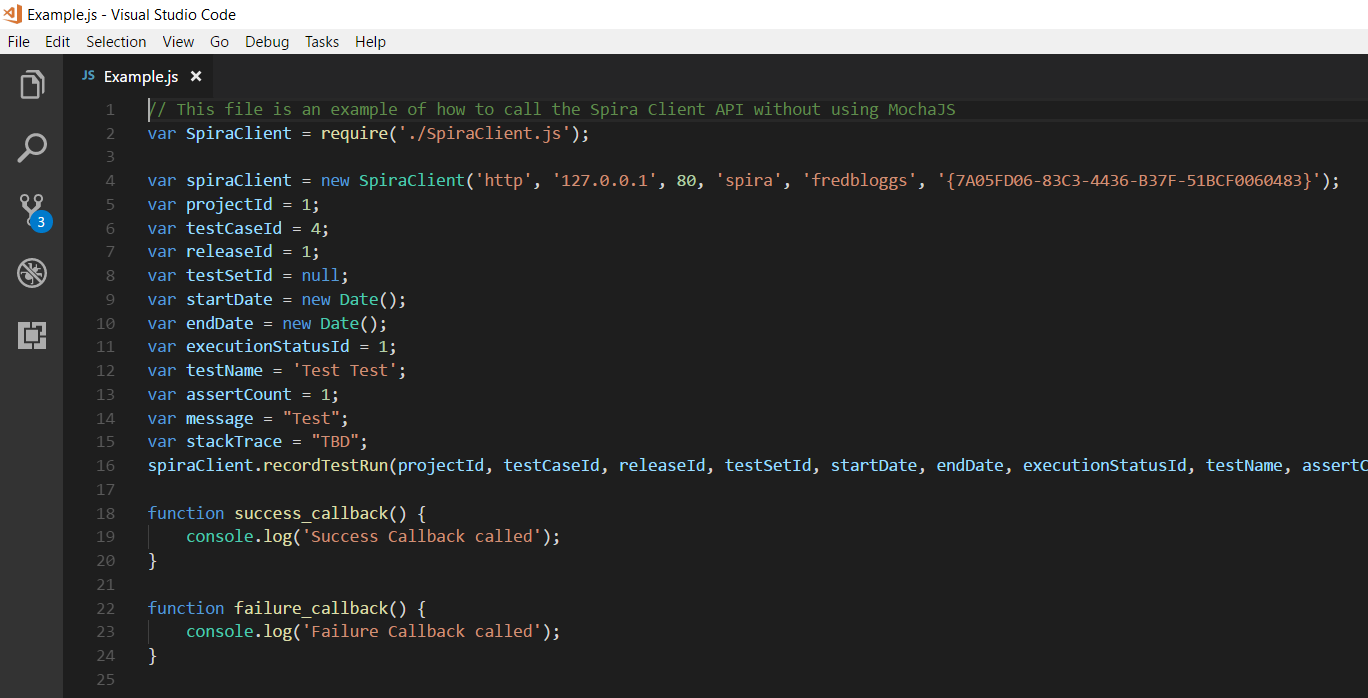

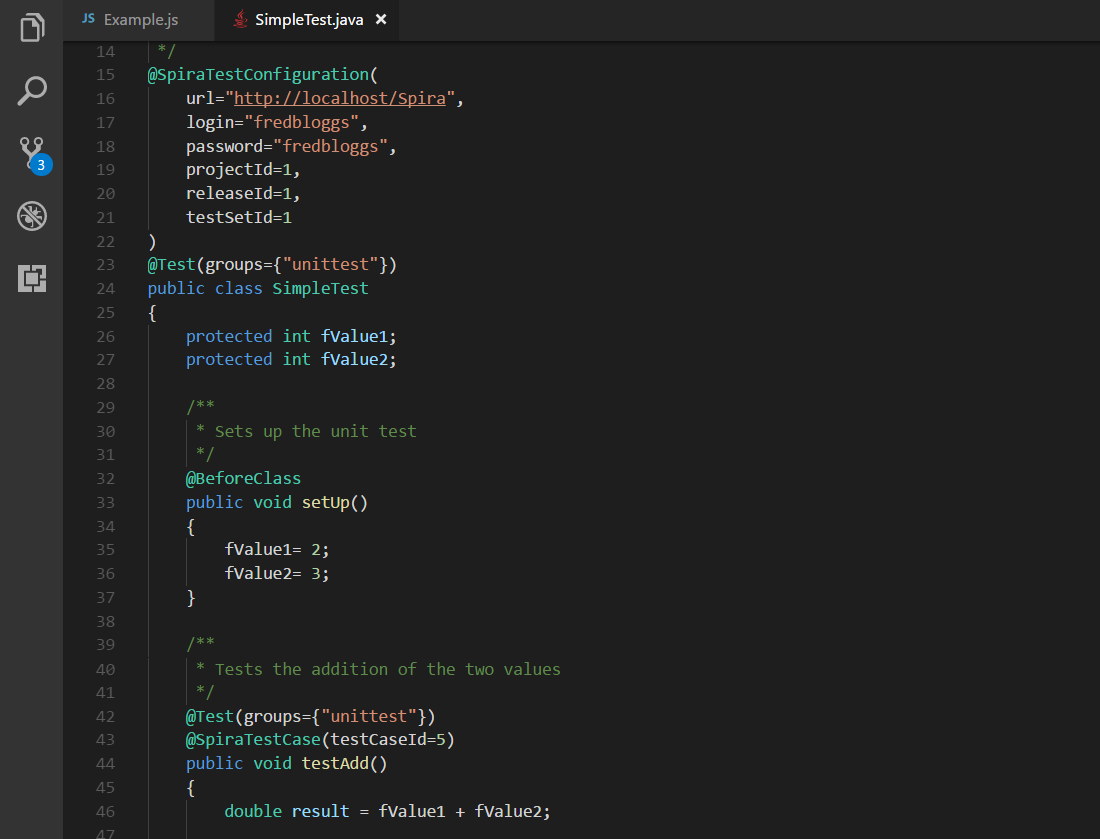

The developers are typically responsible for testing their own work, and most organizations using agile methodologies will practice Test Driven Development (TDD) to ensure that there is good test coverage of the code and that developers are not just testing the “happy path”. Ideally you should also use the same unit testing frameworks (which depend on the language being used: JUnit or TestNG for Java, or UnitJS / Mocha for NodeJS, for example) for automated integration tests that test multiple modules of code at once.
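To make the “happy path” point concrete, here is a minimal sketch using Python's built-in `unittest` framework (chosen only for illustration; the same idea applies to JUnit, TestNG, or Mocha). The function under test, `parse_quantity`, is a hypothetical example, and the suite covers one happy-path case plus three cases that a happy-path-only suite would miss.

```python
import unittest


def parse_quantity(text: str) -> int:
    """Hypothetical function under test: parse a positive order quantity."""
    value = int(text.strip())  # raises ValueError for non-numeric input
    if value <= 0:
        raise ValueError("quantity must be positive")
    return value


class ParseQuantityTests(unittest.TestCase):
    def test_happy_path(self):
        self.assertEqual(parse_quantity("3"), 3)

    def test_surrounding_whitespace(self):
        self.assertEqual(parse_quantity("  12 "), 12)

    def test_zero_is_rejected(self):
        with self.assertRaises(ValueError):
            parse_quantity("0")

    def test_non_numeric_is_rejected(self):
        with self.assertRaises(ValueError):
            parse_quantity("three")


def run_suite() -> unittest.TestResult:
    """Load and run the test case, returning the result object."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(ParseQuantityTests)
    return unittest.TextTestRunner(verbosity=0).run(suite)
```

Calling `run_suite()` returns a result object whose `wasSuccessful()` and `testsRun` values are the kind of outcome a CI job or test management plugin would collect.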

SpiraTeam provides plugins for the most popular unit testing frameworks so that the results of the automated tests are visible directly inside your requirements and test management platform for risk analysis. You should make sure your chosen ALM solution supports your unit test frameworks.

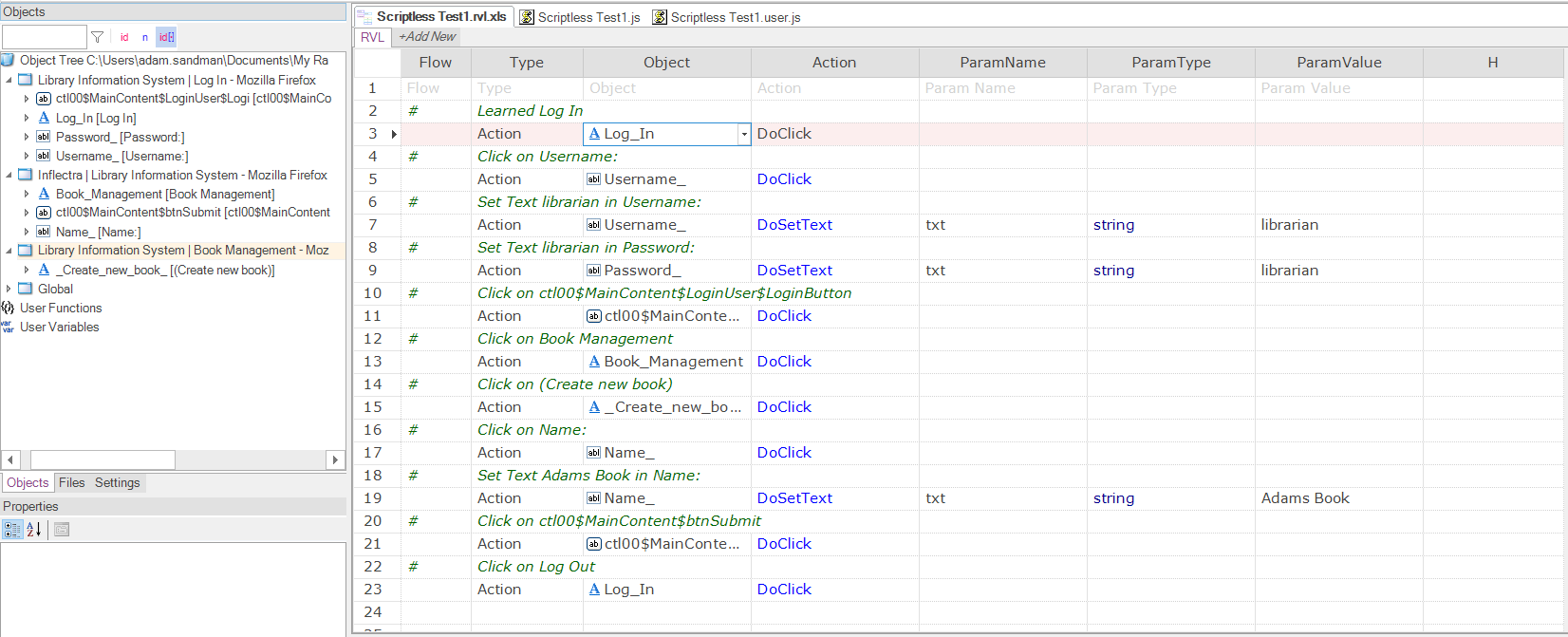

User Interface Testing

In an ideal world, you could test all of the logic and business rules without needing to test the user interface (UI). Unfortunately, the UI is a key part of most systems, and issues in the UI will degrade customer satisfaction, damage customer loyalty, and greatly impact the business overall.

However, the UI is often the most frequently changing part of the application and the hardest to test. Make sure you have the appropriate tools for testing the type of UI you are using (web, mobile, desktop, console, etc.) and that you understand which parts of the UI should be automated (e.g., those that change less frequently and need testing with lots of data permutations). We have some whitepapers that provide guidance on this topic.

Rapise is a powerful automation tool that integrates with SpiraTeam to enable you to automate the testing of web, mobile, desktop and other applications faster than coding by hand, and provides a scriptless interface that lets the business / functional users collaborate more easily with the automation engineers.

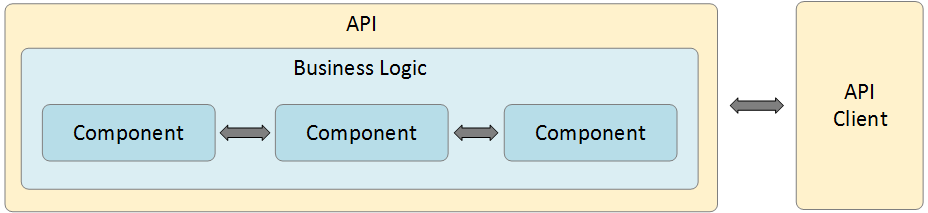

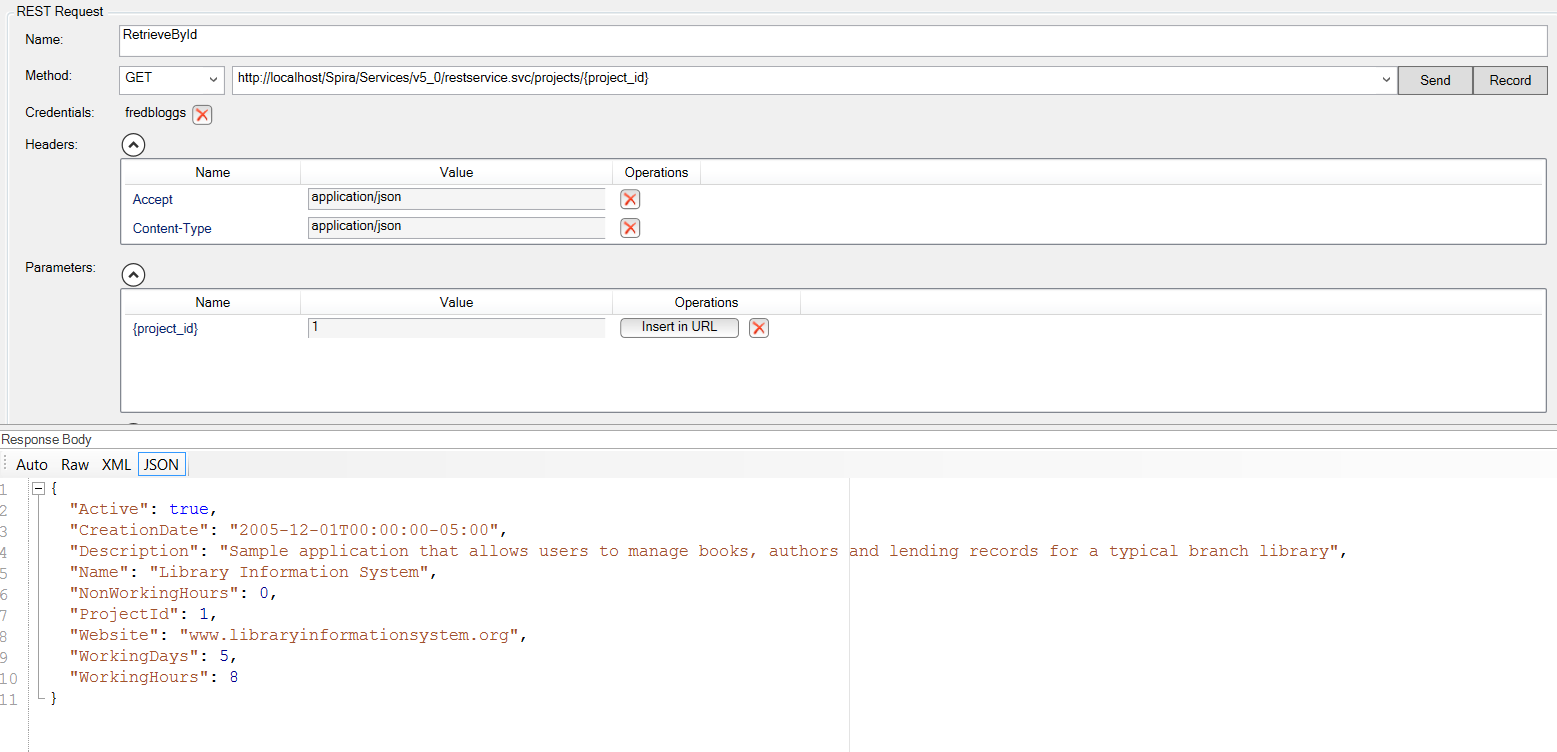

API Testing

Often an afterthought, testing your Application Programming Interfaces (APIs) is vitally important if you intend for your product to be part of an ecosystem. The days of monolithic applications are limited, and if you have well-maintained, versioned, tested APIs, it can be a big differentiator for your products. After all, external developers would prefer to not have to rewrite their code every time you ship a new version of your product.

Rapise includes powerful tools for testing both REST and SOAP style APIs, with integrated reporting back into our SpiraTeam ALM suite. We recommend making sure your API testing tools integrate back into your DevOps toolchain and ALM platform.

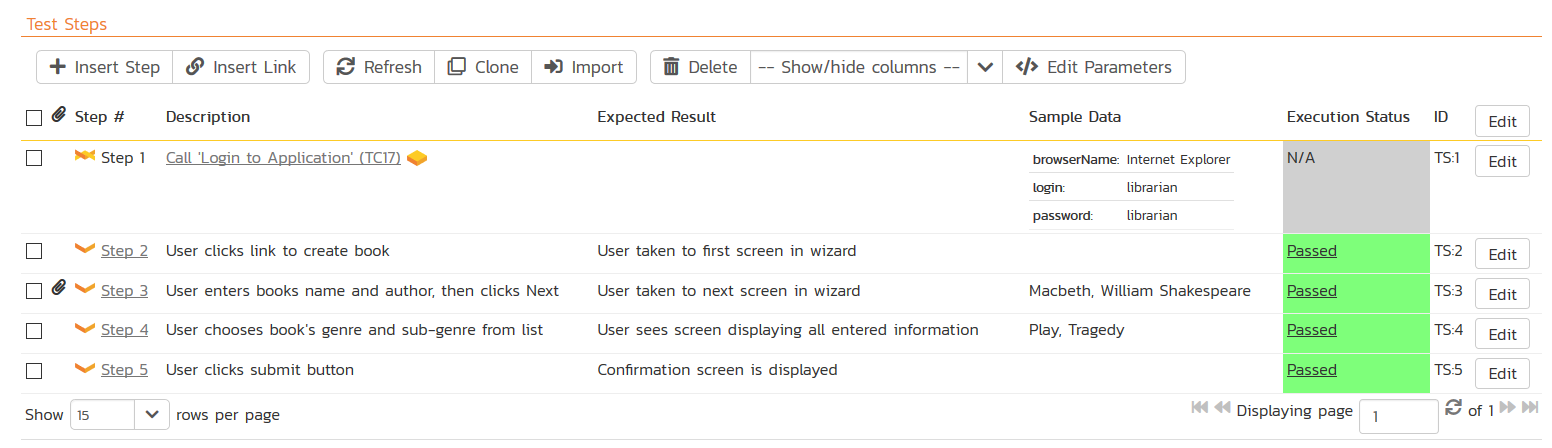

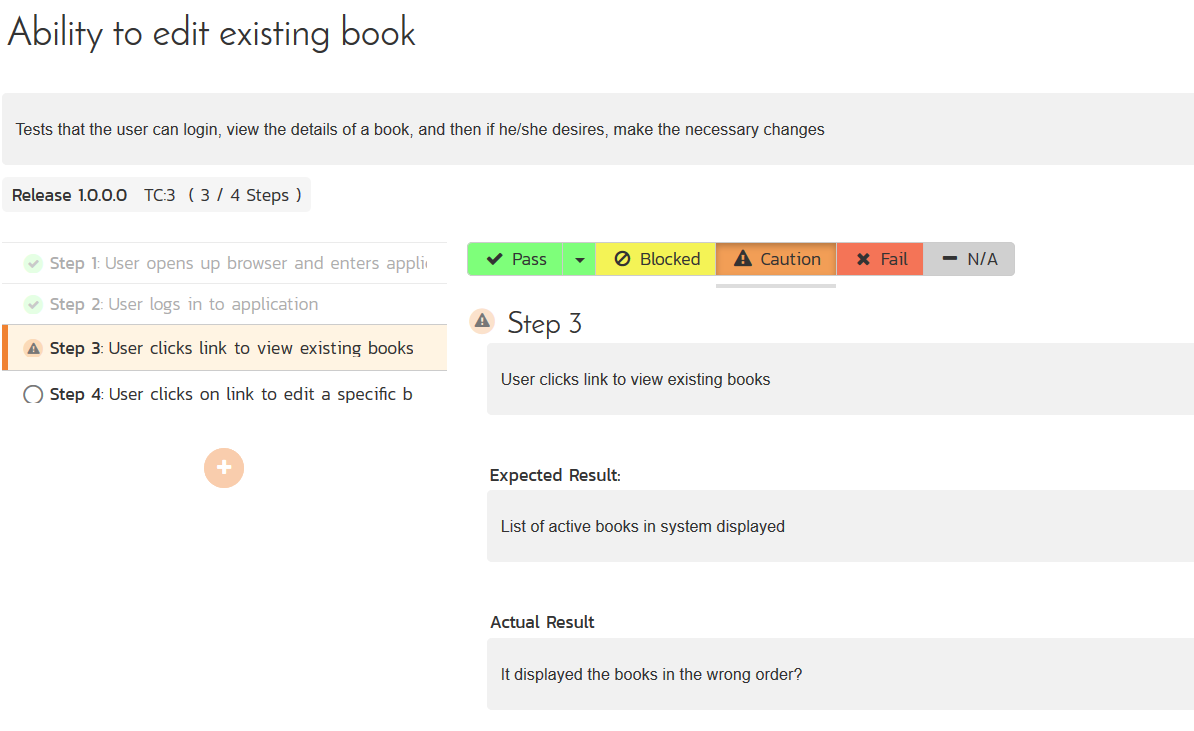

Exploratory & Manual Testing

Despite what is often claimed, you will not catch all issues with automated tests. You need to have skilled human testers looking at the application during development (as well as afterwards). They act as the end users’ advocate and will save you from making your users act as your unpaid (and sometimes vocal) testers.

Traditional scripted manual testing or User Acceptance Testing (UAT) is often used in industries where there are significant regulatory and/or legal requirements to have users test every path. Otherwise, you may want to focus more on freeform, unscripted, exploratory testing, where the testers are free to follow their own path and intuition to see where issues may have been overlooked.

SpiraTest (which is part of SpiraTeam) provides world-class support for both traditional test case-based manual testing and freeform exploratory and session testing. Make sure that your test management solution will support the types of test activity that you will be performing.

Performance & Security Testing

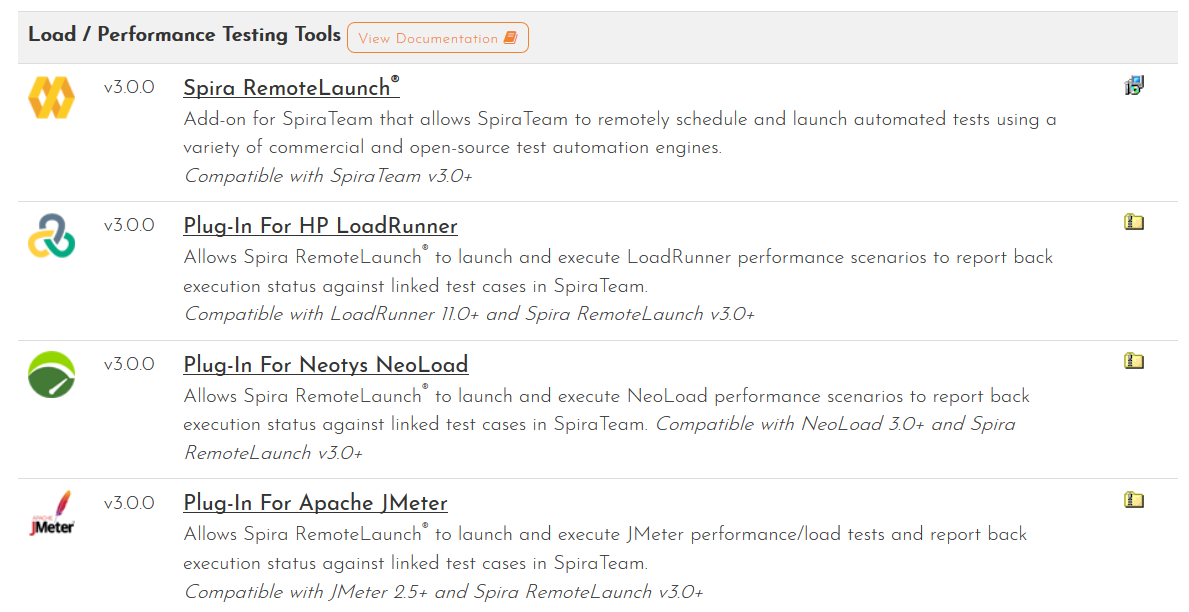

In addition to verifying that the product works as intended, you will usually need to test other aspects such as performance (how well it works under different user loads) and security (how vulnerable it is to security penetration). They are out of the scope of this whitepaper, but you should make sure that tools you choose for your ALM and DevOps toolchain will interoperate cleanly with these tools. For example, SpiraTeam integrates with several different load testing tools (NeoLoad, JMeter, and LoadRunner).

Depending on your industry and the nature of the application, there may be other specialized forms of testing needed (e.g. Section 508 testing for accessibility, Sarbanes-Oxley, FDA testing, etc.).

4. Package

This aspect of the DevOps toolchain is vitally important but is often overlooked. You need to have a repeatable, reliable process for taking all of the built and integrated code, documentation, data, and other artifacts that are created in your CI build pipeline, and packaging them for deployment and release.

The strategies and choices that you make will depend a lot on your users and how the software is delivered. The package process for installable software that customers deploy themselves (either on premise or in their own cloud platform – called Platform as a Service (PaaS)) is very different from that for a Software as a Service (SaaS) model, where you control the environment and the customer simply accesses your application through a URL that you provide.

Artifact Repository

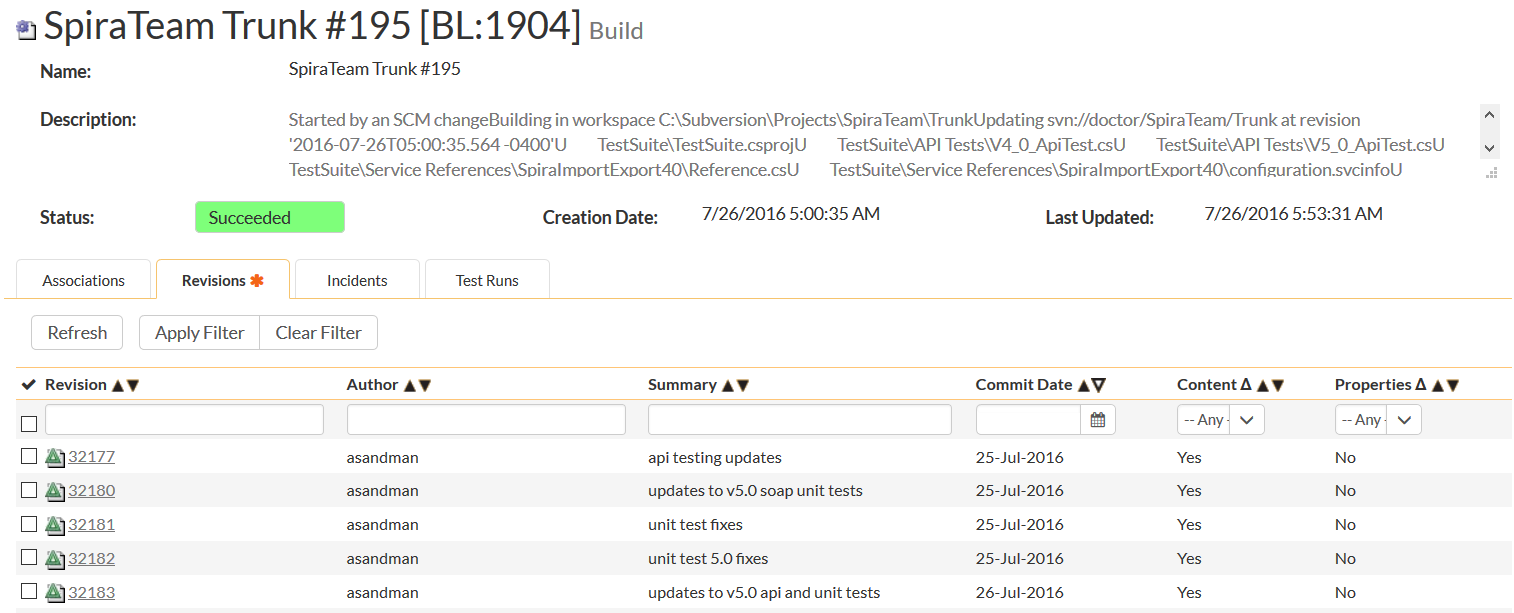

When it comes to packaging, you need to have tools and processes that automate the identification of the changed items in the software and packaging of those items appropriately. For example, if you have a set of user stories, requirements, and bug fixes that are part of the release, you can identify all the source code revisions and commits that are associated to those artifacts, and then pull the appropriate source files (and ideally documentation files) into the final package.
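The lookup described above can be sketched as follows. The data is entirely made up: the work item keys, commit IDs, and file paths are hypothetical, and in practice the two mappings would come from your ALM and SCM tools rather than from hard-coded dictionaries.

```python
from typing import Dict, List, Set

# Hypothetical data: commits linked to each work item,
# and the files touched by each commit.
COMMITS_BY_ITEM: Dict[str, List[str]] = {
    "US-101": ["a1f3", "b7c2"],
    "BUG-17": ["c9d4"],
    "US-205": ["e5a0"],
}
FILES_BY_COMMIT: Dict[str, List[str]] = {
    "a1f3": ["src/cart.py", "docs/cart.md"],
    "b7c2": ["src/cart.py", "tests/test_cart.py"],
    "c9d4": ["src/login.py"],
    "e5a0": ["src/reports.py"],
}


def files_for_release(items: List[str]) -> List[str]:
    """Collect the unique files touched by commits linked to the given items."""
    files: Set[str] = set()
    for item in items:
        for commit in COMMITS_BY_ITEM.get(item, []):
            files.update(FILES_BY_COMMIT.get(commit, []))
    return sorted(files)


print(files_for_release(["US-101", "BUG-17"]))
# ['docs/cart.md', 'src/cart.py', 'src/login.py', 'tests/test_cart.py']
```

Because the file list is derived from the list of work items, dropping an item from the release and rerunning the function recalculates what belongs in the package.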

In addition, you can use this artifact repository to auto-generate the release notes, system, API and user documentation so that these tasks don’t result in omission or error. Unfortunately, the generation and packaging of the documentation is often secondary to the binary code, resulting in frustrated users and third-party developers.
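As a small sketch of auto-generated release notes, the function below renders a plain-text document from a list of work items. The `WorkItem` fields, the two categories, and the sample items are assumptions made for this example; a real implementation would pull the items from your ALM tool's reports or API.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class WorkItem:
    key: str
    kind: str  # "feature" or "fix" (hypothetical categories)
    summary: str


def render_release_notes(version: str, items: List[WorkItem]) -> str:
    """Group items by kind and render them as plain-text release notes."""
    lines = [f"Release {version}", ""]
    for heading, kind in (("New features", "feature"), ("Bug fixes", "fix")):
        matching = sorted((i for i in items if i.kind == kind), key=lambda i: i.key)
        if not matching:
            continue  # omit empty sections
        lines.append(heading)
        lines.extend(f"- {i.key}: {i.summary}" for i in matching)
        lines.append("")
    return "\n".join(lines).rstrip() + "\n"


notes = render_release_notes("2.1", [
    WorkItem("BUG-17", "fix", "Login timeout"),
    WorkItem("US-101", "feature", "Saved carts"),
])
print(notes)
```

Generating the notes from the same data that drives the package means the documentation cannot silently drift from what was actually shipped.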

SpiraTeam provides a robust API and set of reports that let you auto-generate documents such as the release notes as well as generate the final set of product binary code and installation process files that will be bundled together into the final product.

Cloud Deployment Strategies

For cloud-based deployment, you will need to make sure you can package your software and associated artifacts in a way that facilitates easy distribution to your chosen cloud platform, can be rolled back if there is an issue with its deployment, and reduces the burden on the release process (discussed in section 5). Unlike the on-premise package cases, you have more control over the environment and you know the level of training that your IT staff will have.

However, for cloud environments, you will need to understand how your tenancy model (single vs. multi) will impact the packaging process. For single-tenant systems, your packaging process may be similar to releasing a downloadable product. For multi-tenant systems, you will need to consider tools such as “feature flags” to allow you to deploy to all customers, but only enable the new functionality for those that are ready.
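A feature flag can be as simple as a lookup that decides, per tenant, whether a deployed code path is active. The sketch below assumes an in-memory flag store; the flag name, tenant names, and `render_home` function are hypothetical, and a production system would typically read flags from a database or a dedicated flag service.

```python
from typing import Dict, Set

# Hypothetical flag store: flag name -> tenants for which the flag is on.
ENABLED_TENANTS: Dict[str, Set[str]] = {
    "new-dashboard": {"acme", "globex"},
}


def is_enabled(flag: str, tenant: str) -> bool:
    """Unknown flags default to off, so newly deployed code stays dark."""
    return tenant in ENABLED_TENANTS.get(flag, set())


def render_home(tenant: str) -> str:
    # The new code ships to every tenant, but runs only where the flag is on.
    if is_enabled("new-dashboard", tenant):
        return "new dashboard"
    return "classic dashboard"


print(render_home("acme"))     # new dashboard
print(render_home("initech"))  # classic dashboard
```

Enabling the feature for another customer then becomes a data change (adding the tenant to the set) rather than a new deployment.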

We provide our SpiraTeam product as a SaaS solution that users sign up for. In this case, we are able to package it simply as a set of application files plus a resident installation service that is responsible for provisioning instances of the software onto the target environment (traditional data-center or cloud platform such as AWS). For our Rapise automation tool, we provide it packaged as an AWS cloud image, called an AMI.

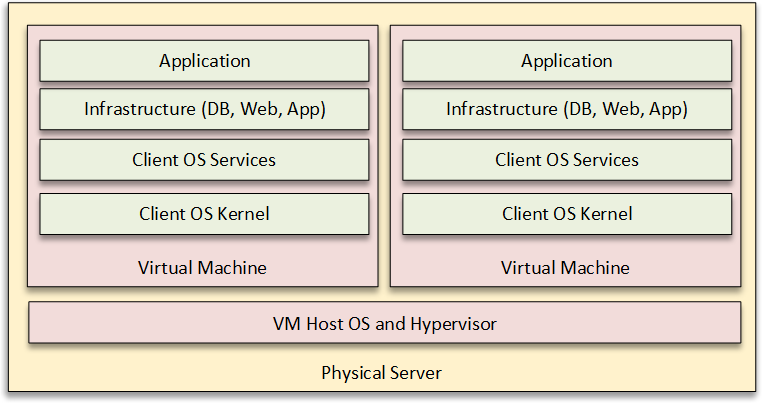

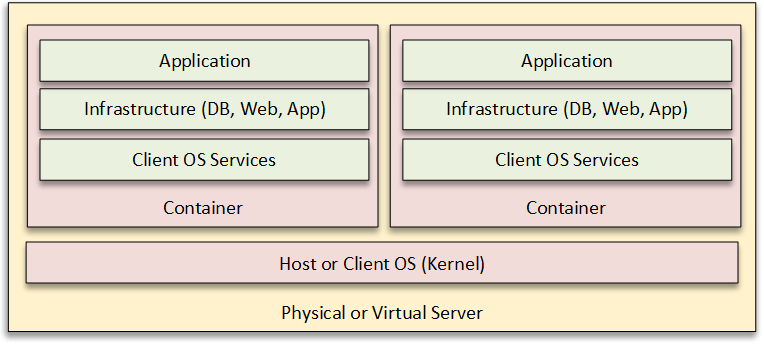

Containers, Images, Applications

When it comes to how to package cloud applications, in addition to simply providing the application as a package that is deployed, you can choose to package up a copy of the entire operating system, pre-requisite services (e.g. web server, database server) and your application. This is often called a “machine image” or virtual machine (VM) image. This has the advantage of being the quickest to deploy and restore, however it results in significant duplication of the stack, incurs additional license fees, and makes maintaining the OS and infrastructure costlier.

As a consequence, another strategy is to containerize your application, which is to package the application and a minimal set of infrastructure services into a single unit (called a container) that can be deployed multiple times in the same Operating System machine image.

This promises to give the deployment and scalability advantages of virtual machines, with reduced infrastructure overhead.

On-Premise Strategies

When it comes to deployment on-premise, you have to determine how to combine all of the build artifacts into a single package that can be easily delivered to the customer. The final package will ideally be self-installing (e.g. Microsoft Installer (MSI) for Windows servers) with the ability for the IT staff to configure the installation for their environment, understand the necessary pre-requisites, and have the appropriate documentation for them to be able to deploy and maintain your package.

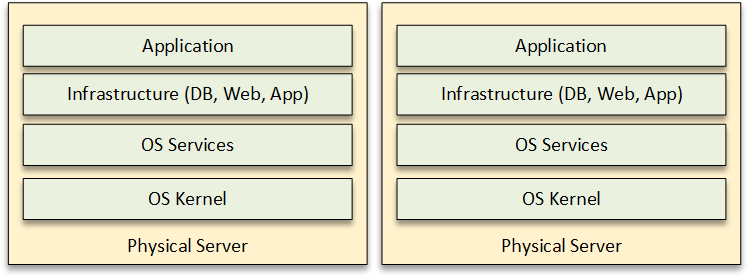

Alternatively you can simply create a Virtual Machine (VM) that can be deployed as a single image onto your on-premise virtualized infrastructure:

Our ALM product SpiraTeam and our automation tool Rapise are available for on-premise use, so we have first-hand experience of some of the pitfalls. The main areas you should focus your attention on are: how the installation package will handle missing pre-requisites, upgrades from older versions, incorrect platform versions, and atypical security or environment settings that may affect the installation.

5. Release

When it comes to the release part of DevOps, there are several different components that need to be considered:

- Release Management

- Configuration Management

- Change Management

- Deployment Automation

Release Management

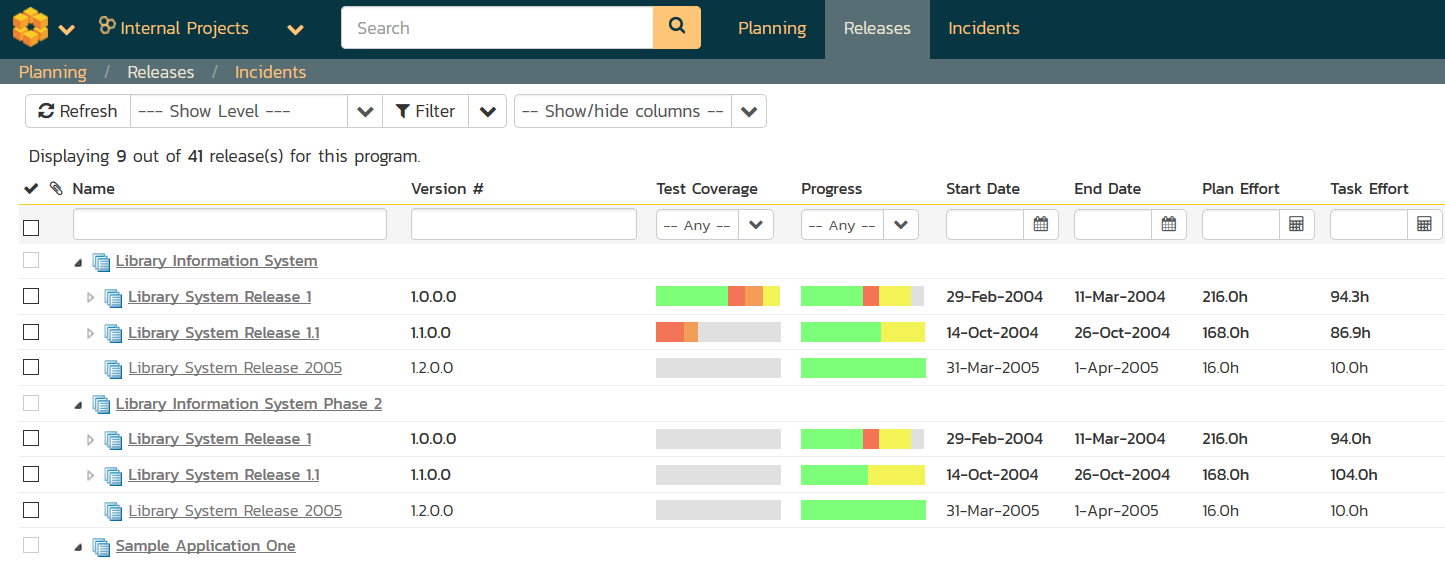

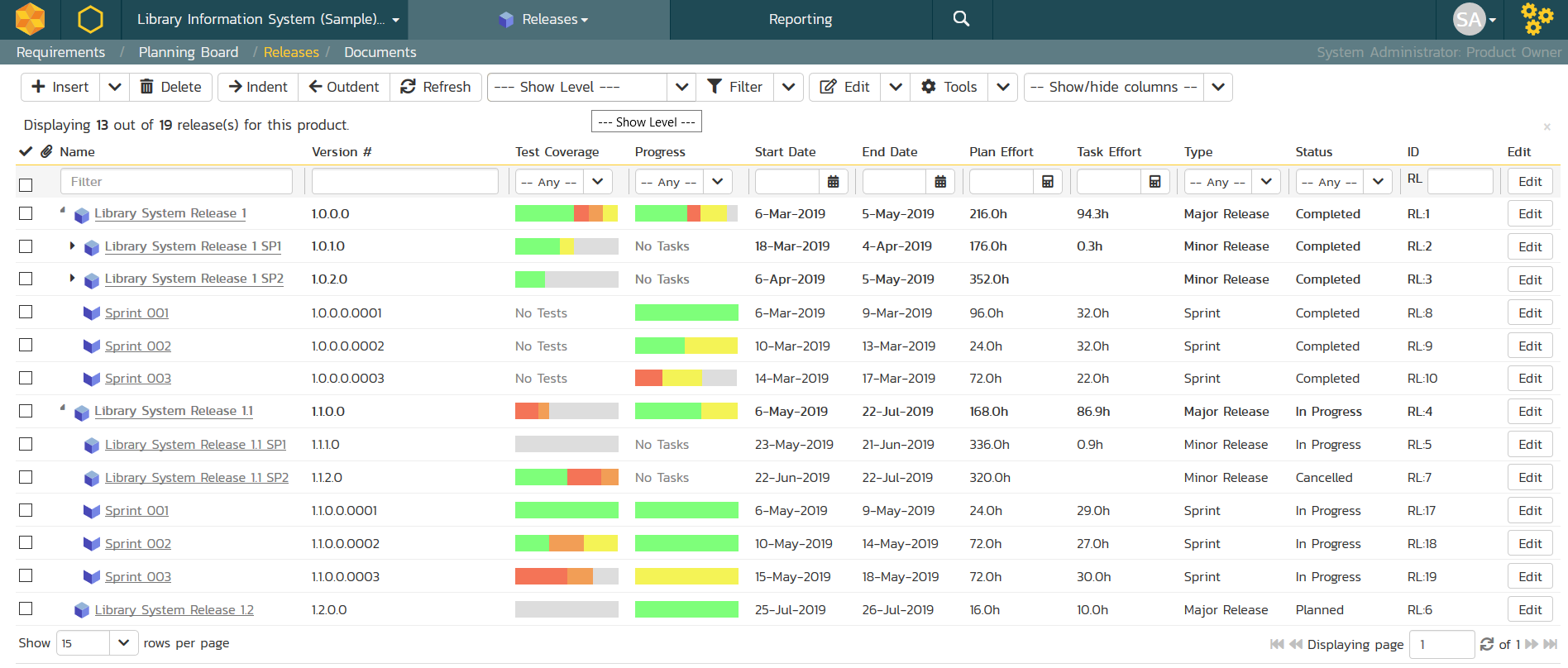

This is the foundation of the release aspect of DevOps: you need to have a way to describe, document and manage all of the different versions of your software. Depending on your software lifecycle and release tempo, your approach to release management will differ.

You might be performing continuous delivery whereby each build and user story is immediately released into production, or you might follow a more gradual approach, where a set of requirements from one or more sprints is packaged and released as a single atomic unit.

Regardless of your approach, you need to have a way to document what releases are planned (and a history of what is already released), what functionality is contained (requirements, user stories, defects, bugs, etc.) and what packages are to be deployed (from the Package phase of DevOps). You should ideally have a way to automate this process, so that if a feature is removed at the last minute, there is a way to “recalculate” in real-time what assets (code, tests, documentation, etc.) are to be removed from the release.

For some customers, once a release is complete, you can move on and focus on the next release, however in some industries (e.g. Healthcare, Government, Banking), you may need to manage multiple release “baselines” in parallel, with the ability to compare release baselines and audit all changes.

When choosing a release management tool, make sure you understand your process and what your needs are. Some tools provide release baselining support, but it may add complexity and overhead if you don’t have a way to disable the feature.

Similarly, some customers need a formal release approval workflow, with signatures, signoff, and auditing; other customers can simply create a new release and deploy against it immediately. SpiraTeam has a built-in release workflow system, but it can be disabled for customers that need a more fluid, less process-driven approach.

Configuration Management

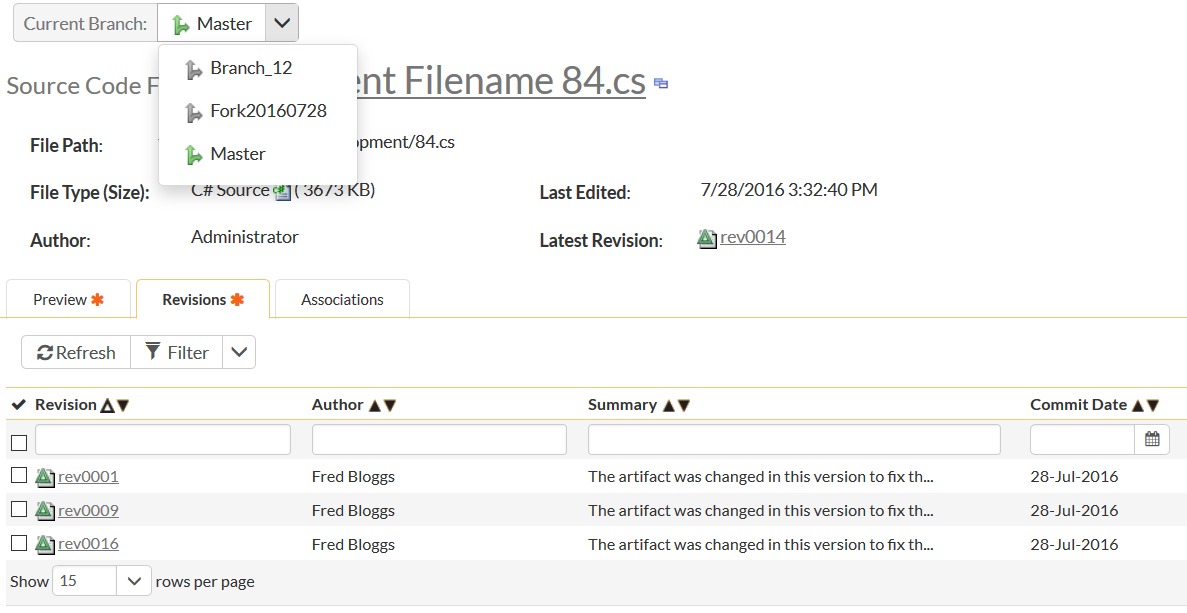

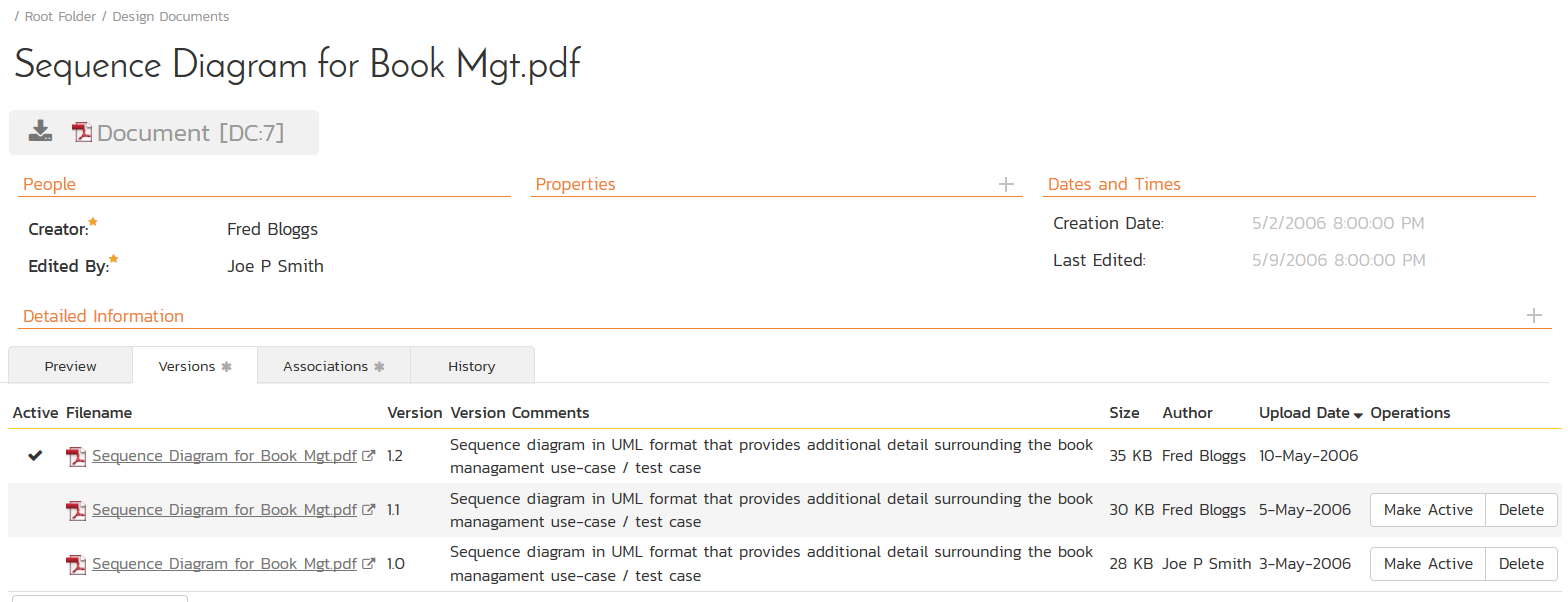

This is an area of the toolchain that is similar to the source code management features discussed in section 2. Once you have the release plans developed, you often need a way to maintain a history of the different configurations of the product.

For some customers, it may be primarily the source code, documentation, packages, and artifacts stored in the SCM system, but for customers in more regulated environments (Hospitals, Defense, Industrial Systems, etc.), they need to maintain an inventory of all the items related to each release. For example, the requirements, tasks, defects, issues, and associated design artifacts may need to be versioned and tagged with each release branch. Depending on the type of information that you need to manage, you may simply use your SCM tool to manage the additional items or use your ALM tool (if it supports configuration management).

In addition, you should define your configuration branching and merging strategy. Do your teams work in multiple branches that are then integrated into a “master” or “trunk” branch, or do you have primary development in the master branch, with patches and hotfixes solely in the other branches?

Change Management

Related to configuration management, change management is the management of changes to the final system. It includes bug and issue tracking, as well as the approval of change requests and enhancements to the product. You should make sure that you have a well-documented change management process that is agreed to by management and aligns with any quality or security standards that your organization follows (e.g. ISO 9001, PCI-DSS, SSAE 16, etc.).

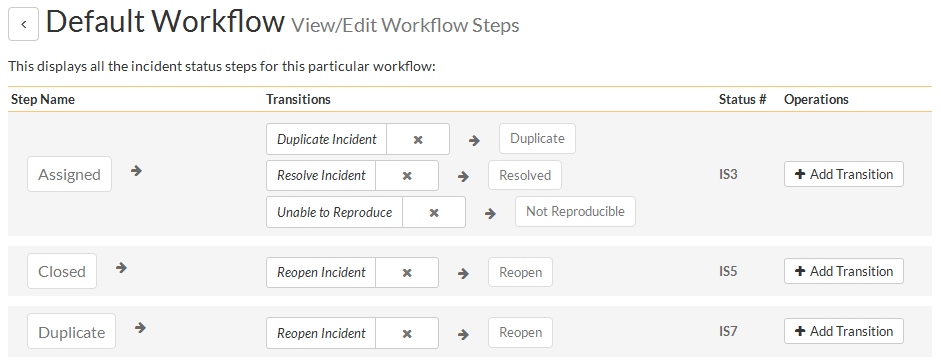

Some change management strategies are very lightweight, essentially being a simple bug or issue triaging workflow. Other strategies may be more complex, with the need for multiple-levels of approval, external audit steps, and regulatory oversight (e.g. FDA validated systems). You should make sure that the tools you use for change management include the necessary functionality to handle your process.

For example, SpiraTeam has built-in support for change management with simple workflows for most users, that can be customized to include robust approval routing, signoffs and electronic signatures (where needed).

Finally, you should make sure you have a well-defined production environment release and communications strategy, so that your users know and expect when product updates will be released, how the changes will affect them, and if you will have a standard or ad-hoc maintenance outage window (if the changes will necessitate a planned outage).

Deployment Automation

The last aspect is automating the deployment of the release itself. For cloud customers this may mean pushing the package to a production environment, applying the changes, initiating alerts and notifications to the users, and/or changing a feature flag to enable the functionality.

For on-premise customers, it might mean uploading an installation package to a customer portal, emailing it to customers, or having an automated update process in their environment pick up the package from the central update service.

Whatever the process, having it as automated as possible, and integrated into the other parts of the Release process (release management, configuration management, and change management) will reduce the likelihood that avoidable errors will creep in. Tools on the market such as Octopus can automate these steps, integrated with your CI tools and ALM platform.

6. Configure

This part of the DevOps toolchain refers to the trend in computing away from large, fixed infrastructure resources that require large upfront investment and long lead-times, to flexible, on-demand provision of infrastructure.

The first phase in this revolution was the move from physical servers running a single Operating System (OS) to virtualized environments such as VMware or Microsoft Hyper-V that let you run multiple machine images on a single physical server.

The second phase was the move to Infrastructure as a Service (IaaS) platforms such as the Amazon Web Services (AWS) Elastic Compute Cloud (EC2), which turn the process of provisioning a new server from a month-long purchasing and installation project into a five-minute task that can be done with the click of a button or a REST API call.

The third phase is often called Infrastructure as Code (IaC), where you can design, implement, and deploy application infrastructure completely through code, the same way that you can control databases, web services and existing software infrastructure.

The final phase is called Continuous Configuration Automation (CCA) and has been made popular by tools such as Chef or Puppet. It lets you change, configure, and automate infrastructure provisioning in the same way that Jenkins and other CI tools let you automate the building of software packages.
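The general idea behind treating infrastructure as code is that you declare the desired state and let tooling work out the changes needed to reach it. The sketch below illustrates that idea only; it is not how Chef, Puppet, or any specific tool is implemented, and the server names, sizes, and image labels are hypothetical.

```python
from typing import Dict, List

Spec = Dict[str, Dict[str, object]]


def plan_changes(desired: Spec, actual: Spec) -> List[str]:
    """Compare desired and actual infrastructure and list the actions needed."""
    actions: List[str] = []
    for name in sorted(desired.keys() | actual.keys()):
        if name not in actual:
            actions.append(f"create {name}")
        elif name not in desired:
            actions.append(f"destroy {name}")
        elif desired[name] != actual[name]:
            actions.append(f"update {name}")
    return actions


# Hypothetical desired state, as it might be kept under version control.
desired = {
    "web-1": {"size": "medium", "image": "app-2.1"},
    "web-2": {"size": "medium", "image": "app-2.1"},
    "db-1": {"size": "large", "image": "postgres"},
}
# Hypothetical state currently running in the environment.
actual = {
    "web-1": {"size": "small", "image": "app-2.0"},
    "db-1": {"size": "large", "image": "postgres"},
    "old-batch": {"size": "small", "image": "batch-1.0"},
}

print(plan_changes(desired, actual))
# ['destroy old-batch', 'update web-1', 'create web-2']
```

When the environment already matches the declaration, the plan is empty, which is what makes it safe to run this kind of automation repeatedly.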

7. Monitor

Putting the deployed packages into production and configuring the infrastructure is not the end of the story. One of the most important parts of DevOps to the business is having a robust set of monitoring, tracing and event reporting tools to ensure that unanticipated changes are detected and remediated before they impact the end users.

There are several different aspects of monitoring that need to be considered, including technical monitoring of the environment, security monitoring, end user experience monitoring, performance monitoring, business event notifications and trend analysis, and predictive maintenance. In addition, end user support is an important part of the DevOps chain, closing the feedback loop with the customers and users. We group these into three categories:

System Monitoring

When it comes to system monitoring, you usually need to ensure you have real-time automated monitoring of your systems and infrastructure that covers the following:

- Functional Monitoring – are all the systems working correctly, are the APIs available, do you have any outages? You can build your own tools (e.g. calling a sample of your APIs) or use commercial monitoring tools and services. You need to make sure you are testing realistically, for example by running the testing tools outside your network so that network equipment failures are also detected.

- Performance Monitoring – is the system responding normally to the current user load? Based on prior performance testing, is the system near its expected operational load, so that additional resources should be provisioned proactively to handle potential additional load? For example, tools such as Dynatrace allow real-time synthetic monitoring.

- Security Monitoring – is your system secure, with no exploitable vulnerabilities or active cyberattacks? There are a variety of commercial (and some limited open source) tools that can scan for vulnerabilities, perform penetration testing, monitor intrusion detection, and detect unusual network patterns that could indicate a cyberattack. For example, tools by Saint and SecurityMetrics can be connected into your monitoring platform.
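If you build your own functional monitoring by calling a sample of your APIs, the core of it might look like the sketch below. It uses only the Python standard library. The URLs are placeholders on the reserved `.invalid` domain, and `fake_probe` is a hypothetical stand-in so the example runs without a network; in real use you would omit the `probe` argument so that `http_status` performs the actual request.

```python
from typing import Callable, Dict, List
from urllib.request import urlopen


def http_status(url: str, timeout: float = 5.0) -> int:
    """Return the HTTP status for url; raises on network or HTTP errors."""
    with urlopen(url, timeout=timeout) as response:
        return response.status


def check_endpoints(
    urls: List[str], probe: Callable[[str], int] = http_status
) -> Dict[str, str]:
    """Probe each URL and classify it as up, degraded, or down."""
    report: Dict[str, str] = {}
    for url in urls:
        try:
            status = probe(url)
            report[url] = "up" if 200 <= status < 300 else f"degraded ({status})"
        except Exception as exc:  # timeouts, DNS failures, HTTP errors
            report[url] = f"down ({type(exc).__name__})"
    return report


# Hypothetical probe used here so the example runs offline.
def fake_probe(url: str) -> int:
    if url.endswith("/orders"):
        raise TimeoutError("no response")
    return 200 if url.endswith("/health") else 503


report = check_endpoints(
    [
        "https://example.invalid/health",
        "https://example.invalid/orders",
        "https://example.invalid/search",
    ],
    probe=fake_probe,
)
for url, state in report.items():
    print(url, state)
```

Running a check like this from a machine outside your own network is what lets it catch failures in network equipment as well as in the application itself.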

Business Monitoring

In addition to monitoring of your systems, it is important to tie in business metrics to your DevOps monitoring platform. Sometimes business events will be the early markers that act as the “canary in the coal mine”, alerting you to a more serious technical issue.

For example, if your e-commerce platform shows a 20% drop in orders compared to a similar period the previous week, there could be a usability or performance issue (or partial outage) that has not yet been formally detected. There are a variety of free tools such as Google Analytics that can monitor the real-time behavior of your users, plus several commercial applications that can monitor and alert based on business transactions and other metrics.
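The week-over-week comparison in this example reduces to a small calculation. The sketch below assumes a 20% alert threshold and made-up order counts; the function names are hypothetical and the numbers are for illustration only.

```python
def relative_drop(previous: int, current: int) -> float:
    """Fractional drop from previous to current (0.0 if there is no drop)."""
    if previous <= 0:
        return 0.0  # no meaningful baseline to compare against
    return max(0.0, (previous - current) / previous)


def should_alert(previous: int, current: int, threshold: float = 0.20) -> bool:
    """Alert when orders fell by at least the threshold fraction."""
    return relative_drop(previous, current) >= threshold


# Hypothetical order counts for the same period in two consecutive weeks.
print(should_alert(previous=500, current=400))  # True: a 20% drop
print(should_alert(previous=500, current=450))  # False: a 10% drop
```

Comparing against the same period of the previous week, rather than the previous hour, avoids raising alerts for normal daily and weekly traffic patterns.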

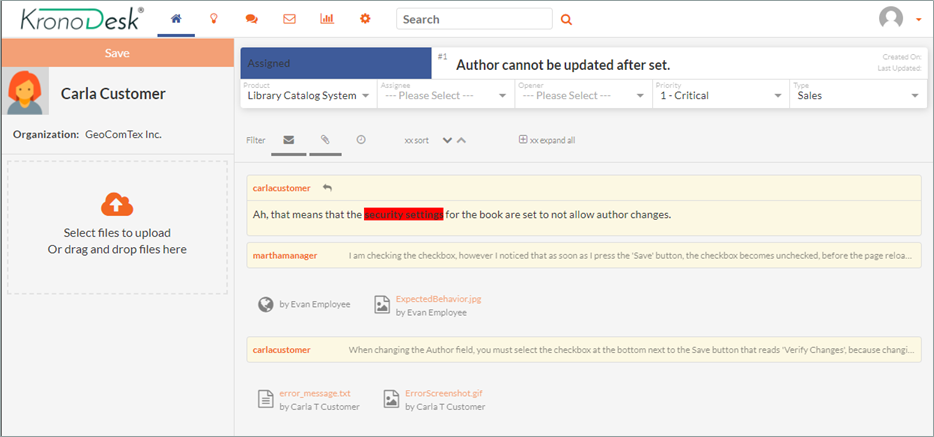

User Monitoring

Your users are also an important part of your monitoring process. A good practice is to make sure that the tools used to support your end users are fully integrated into your monitoring environment. For example, your help desk system can be used to generate metrics around the number of help desk tickets per package. If you see a spike in the number of help desk tickets about one area of the system, that is a good indication that something may have inadequate test coverage, or incorrect configuration for the current load.
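One simple way to spot such a spike is to count this week's tickets per area and compare each count with a baseline. The sketch below assumes you can export the area of each ticket from your help desk system; the area names, baseline figures, and the factor of 2 are all hypothetical.

```python
from collections import Counter
from typing import Dict, List


def spiking_areas(
    tickets: List[str], baseline: Dict[str, float], factor: float = 2.0
) -> List[str]:
    """Return areas whose ticket count is at least factor times their baseline."""
    counts = Counter(tickets)
    return sorted(
        area
        for area, count in counts.items()
        # Areas with no recorded baseline are compared against 1 ticket.
        if count >= factor * baseline.get(area, 1.0)
    )


# Hypothetical data: one entry per ticket raised this week, by area.
this_week = ["billing"] * 9 + ["login"] * 3 + ["reports"] * 2
# Hypothetical average weekly ticket count per area.
weekly_baseline = {"billing": 4.0, "login": 3.0, "reports": 2.5}

print(spiking_areas(this_week, weekly_baseline))  # ['billing']
```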

Beyond simply providing insight into the stability and usability of your system, feedback and ideas from your users are a critical resource that should be fed back into the start of the DevOps toolchain. When planning your next release (see Section 1: Plan), feedback from your users that came in from your support team and help desk can inform future functionality.

Conclusion

The marriage of agile methodologies, virtualized and cloud computing, and on-demand infrastructure has changed the process for creating and managing software and IT resources. The ability to capitalize on DevOps will depend to some degree on the specific technologies and regulatory constraints on a specific business or application, but all organizations can benefit.