Contextual Considerations

Many times, people interpret the turnaround time differently. It could indicate lead time (new to closed) that includes the response time (new to work starting) and cycle time (work starting to closed). Sometimes, the turnaround time may eliminate the response time and only focus on cycle time because there may be other factors prioritizing what incidents were triaged to be important during that release cycle. So, it is important that one understands the context.

In this article, we will consider turnaround time to be the lead time. That is, from the time an incident is created (new) to the time all work on that incident is finished (closed).

Setting up data

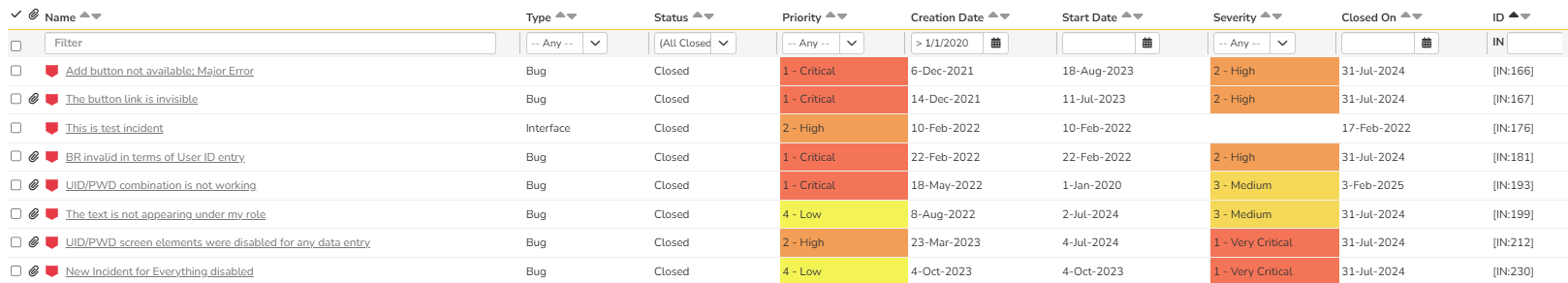

Given below is the data showing 8 records.

Graphical Illustration

In order to demonstrate how to build this query, let us build the SQL query in an agile fashion - iteratively and incrementally! You can see in the header of the CREATION DATE column that I have applied a filter to show incidents after Jan 1, 2020.

SQL Query - Part 1

Given below is the SQL Query.

select

R.INCIDENT_ID,

CASE WHEN R.SEVERITY_NAME is null

then "0 - Unassigned"

else R.SEVERITY_NAME end as SEVERITY,

R.NAME,

R.CREATION_DATE,

R.CLOSED_DATE,

CASE WHEN R.INCIDENT_STATUS_NAME = "Closed"

Then DiffDays(R.CREATION_DATE, R.CLOSED_DATE)

else

0

end as TAT_AS_LEAD_TIME

from SpiraTestEntities.R_Incidents as R

where

R.PROJECT_ID = ${ProjectId} and

R.IS_DELETED = False and

R.CLOSED_DATE IS NOT NULL and

Year(R.CREATION_DATE) > 2020

Query - Part 1 - Explanation

- As part of the defect triaging, the severity may not be immediately assigned. So, it is possible that the incidents reported may have an empty severity rating. To address this issue, we have the CASE statement to assign a '0 - Unassigned' for empty severity rating.

- DiffDays is an ESQL function that gives the difference between source and target dates as a number of days elapsed. Since we are using lead time, we use (R.CREATION_DATE, R.CLOSED_DATE). If cycle time is considered eliminating response time, then, use (R.START_DATE, R.CLOSED_DATE) and ensure that start_date is not null.

- On the result set, apply the where clause to eliminate deleted records and the closed_date not being null.

- For the specific dataset that I had, I applied a filter to look for incidents created after 2020. So, Year(R.CREATION_DATE) > 2020 is relevant. Year is a ESQL function that extracts the year from the DateTime format as an integer.

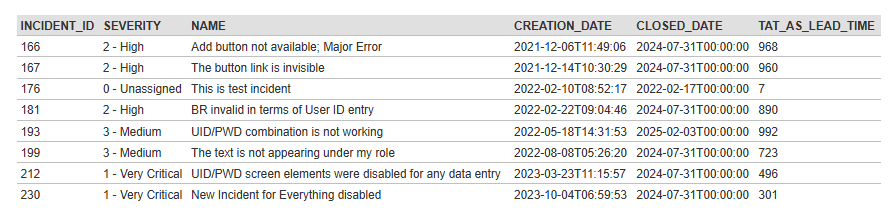

Query - Part 1 - Output

The report below shows that turnaround time computed and has 8 records.

SQL Query - Part 2

Given below is a query that builds on the earlier query to aggregate details for the graph.

select

OQ.SEVERITY, COUNT(OQ.INCIDENT_ID) as TAT_COUNT_BY_SEVERITY from

(

select

R.INCIDENT_ID,

CASE WHEN R.SEVERITY_NAME is null then "0 - Unassigned" else R.SEVERITY_NAME end as SEVERITY,

R.NAME,

R.CREATION_DATE,

R.CLOSED_DATE,

CASE WHEN R.INCIDENT_STATUS_NAME = "Closed"

Then DiffDays(R.CREATION_DATE, R.CLOSED_DATE)

else

0

end as TAT_AS_LEAD_TIME

from SpiraTestEntities.R_Incidents as R

where

R.PROJECT_ID = ${ProjectId} and

R.IS_DELETED = False and

R.CLOSED_DATE IS NOT NULL and

Year(R.CREATION_DATE) > 2020) as OQ

group by OQ.SEVERITY

order by OQ.SEVERITY

Query - Part 2 Explanation

- The inner query comes from the SQL - Part 1.

- This outer query builds on this inner query aggregating the counts by severity.

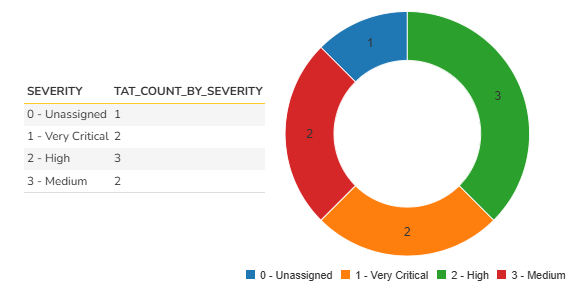

Query - Part 2 - Graph Output

Given below is the graphical illustration. To bring both the result set and the graph together, this graph uses the donut chart confirming the 8 record counts.

SQL Query - Part 3

Now, many teams may find just this information adequate enough. However, the aging of the incidents also may be required as part of the decision-making. In such cases, one may want to report this information by a time period (like less than X days, X to Y days, and > Y days, etc.) In the final query to accommodate this request, we are using less than 500 days, 500-750 days, and greater than 750 days as the time period anchors.

select FOQ.SEVERITY, sum(FOQ.BELOW_500) as BELOW_500_DAYS, sum(FOQ.FROM_500_TO_750) as BETWEEN_500_TO_750_DAYS, sum(FOQ.ABOVE_750) as ABOVE_500_DAYS from

(

(select OQ.SEVERITY, COUNT(OQ.TAT_AS_LEAD_TIME) as BELOW_500, 0 as FROM_500_TO_750, 0 as ABOVE_750 from

(

select

R.INCIDENT_ID,

CASE WHEN R.SEVERITY_NAME is null then "0 - Unassigned" else R.SEVERITY_NAME end as SEVERITY,

R.NAME,

R.CREATION_DATE,

R.CLOSED_DATE,

CASE WHEN R.INCIDENT_STATUS_NAME = "Closed"

Then DiffDays(R.CREATION_DATE, R.CLOSED_DATE)

else

0

end as TAT_AS_LEAD_TIME

from SpiraTestEntities.R_Incidents as R

where

R.PROJECT_ID = ${ProjectId} and R.IS_DELETED = False and R.CLOSED_DATE IS NOT NULL and Year(R.CREATION_DATE) > 2020) as OQ

where OQ.TAT_AS_LEAD_TIME > 0 and OQ.TAT_AS_LEAD_TIME < 501

group by OQ.SEVERITY)

union

(select OQ.SEVERITY, 0 as BELOW_500, COUNT(OQ.TAT_AS_LEAD_TIME) as FROM_500_TO_750, 0 as ABOVE_750 from

(

select

R.INCIDENT_ID,

CASE WHEN R.SEVERITY_NAME is null then "0 - Unassigned" else R.SEVERITY_NAME end as SEVERITY,

R.NAME,

R.CREATION_DATE,

R.CLOSED_DATE,

CASE WHEN R.INCIDENT_STATUS_NAME = "Closed"

Then DiffDays(R.CREATION_DATE, R.CLOSED_DATE)

else

0

end as TAT_AS_LEAD_TIME

from SpiraTestEntities.R_Incidents as R

where

R.PROJECT_ID = ${ProjectId} and R.IS_DELETED = False and R.CLOSED_DATE IS NOT NULL and Year(R.CREATION_DATE) > 2020) as OQ

where OQ.TAT_AS_LEAD_TIME > 500 and OQ.TAT_AS_LEAD_TIME < 751

group by OQ.SEVERITY)

union

(select OQ.SEVERITY, 0 as BELOW_500, 0 as FROM_500_TO_750, COUNT(OQ.TAT_AS_LEAD_TIME) as ABOVE_750 from

(

select

R.INCIDENT_ID,

CASE WHEN R.SEVERITY_NAME is null then "0 - Unassigned" else R.SEVERITY_NAME end as SEVERITY,

R.NAME,

R.CREATION_DATE,

R.CLOSED_DATE,

CASE WHEN R.INCIDENT_STATUS_NAME = "Closed"

Then DiffDays(R.CREATION_DATE, R.CLOSED_DATE)

else

0

end as TAT_AS_LEAD_TIME

from SpiraTestEntities.R_Incidents as R

where

R.PROJECT_ID = ${ProjectId} and R.IS_DELETED = False and R.CLOSED_DATE IS NOT NULL and Year(R.CREATION_DATE) > 2020) as OQ

where OQ.TAT_AS_LEAD_TIME > 750

group by OQ.SEVERITY)) as FOQ

group by FOQ.SEVERITY

Query - Part 3 - Explanation

- In the OQ we created in Part 2, we have modified the SELECT clause and the where clause.

- In the SELECT clause, we apply the following logic to build three columns for our three duration set.

select OQ.SEVERITY, COUNT(OQ.TAT_AS_LEAD_TIME) as BELOW_500, 0 as FROM_500_TO_750, 0 as ABOVE_750

- In the WHERE Clause, we apply the following logic to bring data to the required columns.

where OQ.TAT_AS_LEAD_TIME > 0 and OQ.TAT_AS_LEAD_TIME < 501

- Then, we repeat another data set (repeating this entire logic) but apply for the second and third columns applying the required intervals for the days.

- All the three datasets are combined using a "UNION" clause.

- Finally, we aggregate this entire 3-part SQL data by severity summing the counts for three columns we created.

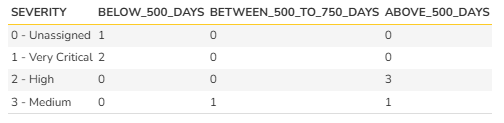

Query - Part 3 - Output

Given below is the result amenable for a graphical display. If you summarize the counts across the three column in this result set, we will see 8.

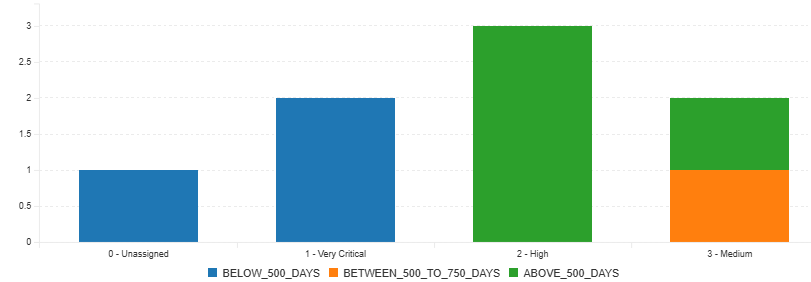

Query - Part 3 - Graph

Given below is the stacked bar chart that shows this data. You can see that "3 - Medium" severity has data aging in two different time-periods.

Additional Thoughts

- While this query used the notion of lead time (new to closed), if your business process requires cycle time eliminating response time, then the queries must change.

- Since my data had a large turnaround time, I used the x<501, 500<x<751, x>750 as the duration anchors. If your business process dictates a more granular timeframe (like less than 7 days, 8-14, 15-21, etc.), then the queries will have to be modified accordingly.

- It is generally recommended to review the turnaround time as a means to improve the process currently used. So, one can assess this based on severity, priority, type of incident, components or specific custom lists, etc. But, it is not recommended to analyze this by the owner of the incident.