August 22nd, 2016 by Adam Sandman

In the first article in this two-part series we discussed some of the reasons why it might not be desirable to spend the time to write formal test cases. Now this might seem like heresy for a company that sells a test management system to even say! However, to recap from last time, there are some good reasons:

- We have experienced testers who know the application and don't need to follow detailed test scripts

- We have inherited a large system with no formal test cases and we need to understand where the risks lie

- We want to spend more time testing the system and less time documenting it.

Please read last week's post if you want to get more background on these!

In this week's article we're going to describe the session-based testing approach we took when testing SpiraTeam 5.0.

Basics of Session-Based Testing

As described in this great article on session-based testing, the idea is to take the concept of exploratory testing, where testers 'explore' the application by following their intuition, logging bugs and finding problems, and apply some structure to it without the straitjacket of scripted testing. You take an area of the system or of its functionality and break it down into sessions of roughly 45 minutes to an hour. Each session covers a specific high-level objective and gives the tester the freedom to follow an unstructured path to find the problems and issues lurking in that part of the system.

These session metrics are the primary means to express the status of the exploratory test process:

- Number of sessions completed

- Number of problems found

- Function areas covered

- Percentage of session time spent setting up for testing

- Percentage of session time spent testing

- Percentage of session time spent investigating problems

The key is that instead of measuring the number of test cases, test steps and the percentage completed, you focus on the number of high-level sessions and whether they turn up actual problems. If your sessions turn up few problems, you may need to refocus on different objectives or different parts of the system.
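To make the metrics above concrete, here is a minimal Python sketch of how they could be rolled up from a list of session records. The `Session` class, its field names, the `summarize` helper and the sample numbers are all made up for illustration; none of this comes from SpiraTest itself.

```python
from dataclasses import dataclass


@dataclass
class Session:
    """One exploratory testing session (hypothetical record layout)."""
    area: str                     # function area the session covered
    problems_found: int
    setup_minutes: float
    testing_minutes: float
    investigating_minutes: float

    @property
    def total_minutes(self) -> float:
        return self.setup_minutes + self.testing_minutes + self.investigating_minutes


def summarize(sessions: list[Session]) -> dict:
    """Roll completed sessions up into the session metrics listed above."""
    total = sum(s.total_minutes for s in sessions)

    def pct(minutes: float) -> float:
        # Share of all session time, as a percentage rounded to one decimal.
        return round(100 * minutes / total, 1) if total else 0.0

    return {
        "sessions_completed": len(sessions),
        "problems_found": sum(s.problems_found for s in sessions),
        "areas_covered": sorted({s.area for s in sessions}),
        "pct_setup": pct(sum(s.setup_minutes for s in sessions)),
        "pct_testing": pct(sum(s.testing_minutes for s in sessions)),
        "pct_investigating": pct(sum(s.investigating_minutes for s in sessions)),
    }


# Made-up sample data: two one-hour sessions.
sessions = [
    Session("Area A", problems_found=5, setup_minutes=10,
            testing_minutes=30, investigating_minutes=20),
    Session("Area B", problems_found=2, setup_minutes=5,
            testing_minutes=40, investigating_minutes=15),
]
summary = summarize(sessions)
print(summary)
```

A summary like this makes it easy to spot sessions, or whole areas, where a lot of time goes into setup or where few problems are being found.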

Session-Based Testing with SpiraTest

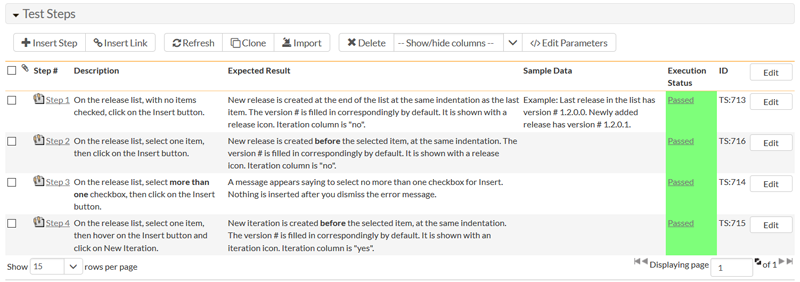

Normally when you use SpiraTest to do traditional scripted testing, you would have a business analyst create test cases with discrete test steps:

In this example, we have described each of the steps that a tester who had never used the system before would need to take to achieve a very specific action (e.g. to create a release). However, if all of the testers are familiar with the application and have used it before, it takes longer to write this test than to execute it. More importantly, it misses out on all the nuances and edge cases that may lurk outside the scenario described in the script. The best testers find the issues that the business analyst or developer cannot even dream of!

However, it turns out that SpiraTest is also a very good session-based and exploratory testing tool. With its ability to let a tester easily write down their observations, embed images into their descriptions and tie back what they did to the functional areas covered (i.e. the requirements), SpiraTest allows testers the freedom to explore, but gives them a powerful way to record their observations.

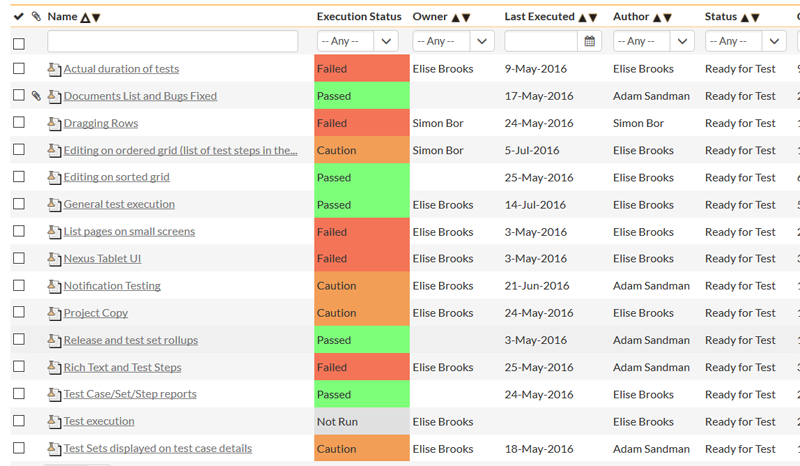

What we did with the testing of SpiraTeam 5.0 was to brainstorm a list of all the things that had changed and all of the areas that the developers felt were high risk, complex or "might contain a can of worms". We then wrote these into SpiraTeam as test cases:

Some of these were defined upfront; others were specified later based on the results of earlier tests. For example, one of the tests was to verify that the new drag and drop worked. When it was found that one type of grid (the ordered grid) wasn't working the same as the others, a new test case was written with the objective of focusing specifically on that grid.

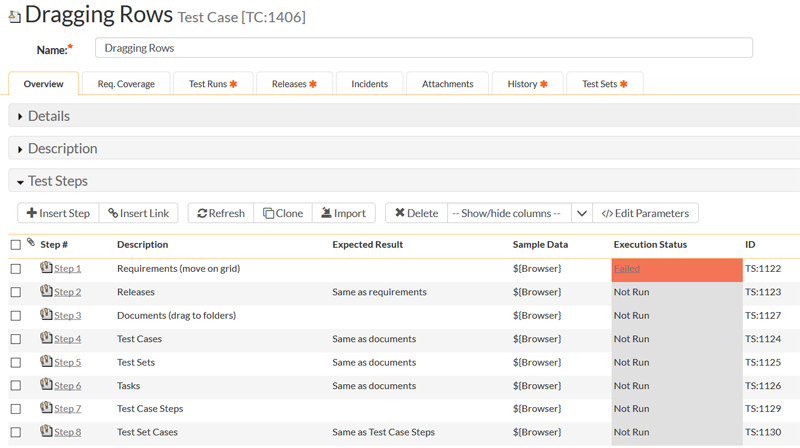

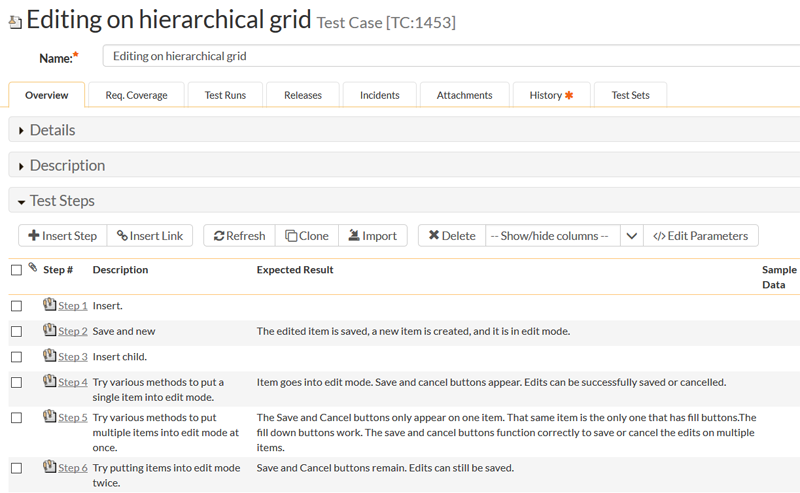

Now, just as important is how we write the "test steps" inside these session/exploratory "test cases":

In this example (which was written upfront during the design and development of that feature in the appropriate sprint), the steps are just an enumeration of the different cases that needed to be included when verifying the 'drag and drop' functionality. They didn't specify exactly what needed to be tested, just that dragging and dropping in the listed modules had been changed and could have lots of lurking issues.

In this second example, based on her experiences in the earlier testing activities, the tester wrote the steps almost as a checklist of things that she wanted to dive deeper into based on where she felt the problems would lie. This specific test was actually written down during the running of a previous one. That is the great thing about this approach: you can run one test and write the next one at the same time.

Now that we have these tests defined, it's time to run them.

Executing the Testing Sessions

Unlike in scripted testing, with exploratory / session-based testing the tester doesn't need to follow a specific script or set of actions. Instead she uses the test case as a high-level set of objectives and a checklist of areas to explore that need particular attention.

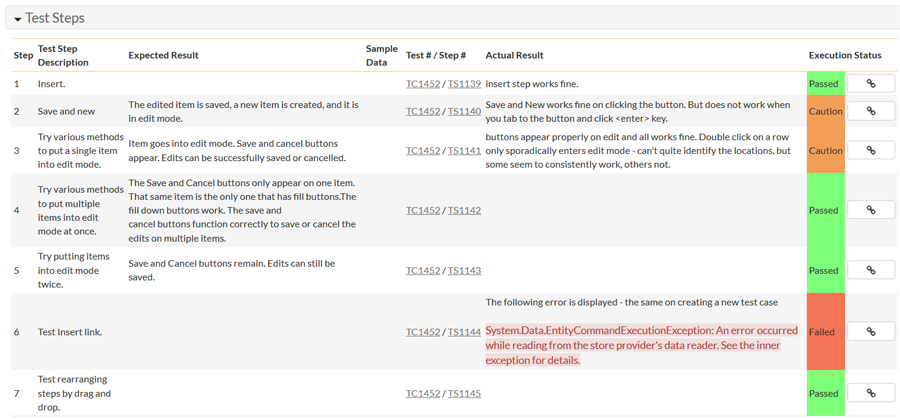

During the testing, the tester simply runs the test using the test execution wizard. However, unlike traditional testing, where the tester records a simple Pass or Fail and a short description of what failed, with exploratory testing she writes a more detailed account of what she's seeing during the testing, using SpiraTest's ability to embed images quickly and easily into the result.

As you can see in the example test run, the result looks a lot like a set of individual defect reports joined together in a sequence. In this example, she found a bunch of issues with the drag and drop functionality. At this point we didn't want to log 5 defects for the issues because the system wasn't yet ready for that, so it was much easier for us to keep track of this one testing artifact than 5 disconnected defects. At this stage of the sprint the functionality wasn't finished yet, so it was very helpful for the developers to get this early look into what issues were lurking.

Once the developers had finished the drag and drop functionality in this sprint and had made sure they had addressed all the identified problems in the test run, our tester could re-execute the same session test. Unlike pure exploratory testing, the structure of session-based testing allows you to reuse a previous exploratory test as a regression test.

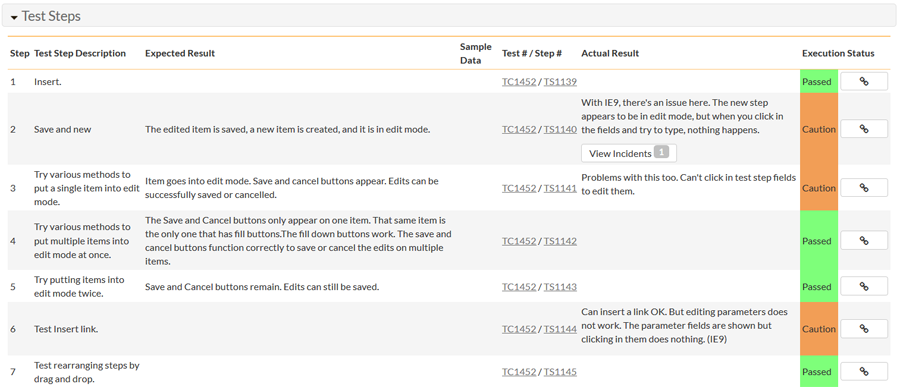

When the tester executed the same test a second time later in the sprint, the test run looked quite different:

In this case the functionality pretty much works correctly. The tester couldn't find any issues in most browsers, even when spending considerable time testing the various features and trying all kinds of extreme cases. The exception was IE9, which had some issues. In a traditional test we'd have needed to create a master test case with parameters and pass through the browser names for each combination. With session-based testing we can simply add the list of browsers to the test case description, or just have them written into the release description (this release needs to support IE9+, Firefox, Chrome, etc.), and the tester knows to test all the options. This lets us spend more time testing and less time documenting.

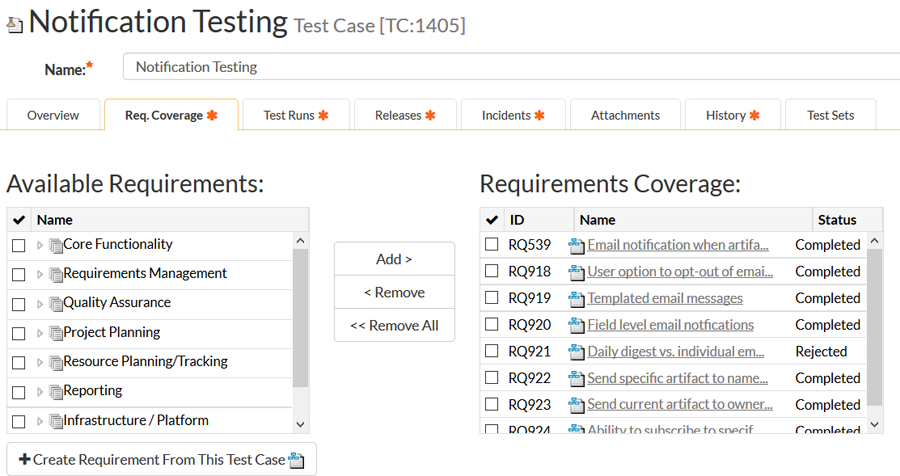

Finally, one of the great benefits of using session-based testing rather than pure exploratory testing is that you can still tie the tests back to the requirements and features that are being tested, so you can still get test coverage metrics and know which parts of the system have the most risk:

Furthermore, one of the metrics for session-based testing we mentioned at the beginning was the number of function areas covered. When you use SpiraTest to manage your requirements and perform session-based testing, the requirements coverage tracking gives you this metric out of the box.
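To illustrate the idea behind this metric (not how SpiraTest calculates it internally), here is a small Python sketch that derives coverage from a mapping of session tests to requirements. The requirement IDs, test names and the `coverage_report` helper are hypothetical.

```python
def coverage_report(all_requirements, test_links):
    """Return (covered, uncovered, percent) given test-to-requirement links.

    all_requirements: list of requirement IDs in the release.
    test_links: dict mapping a session test name to the requirement IDs it covers.
    """
    # A requirement counts as covered if at least one session test links to it.
    linked = {req for reqs in test_links.values() for req in reqs}
    covered = [r for r in all_requirements if r in linked]
    uncovered = [r for r in all_requirements if r not in linked]
    percent = 100 * len(covered) / len(all_requirements) if all_requirements else 0.0
    return covered, uncovered, percent


# Made-up example: three requirements, two session tests.
all_requirements = ["REQ-1", "REQ-2", "REQ-3", "REQ-4"]
test_links = {
    "Session test A": ["REQ-1", "REQ-2"],
    "Session test B": ["REQ-2", "REQ-4"],
}
covered, uncovered, percent = coverage_report(all_requirements, test_links)
print(f"Covered: {covered}, uncovered: {uncovered}, coverage: {percent:.0f}%")
```

The uncovered list is the useful part in practice: it points at the function areas that no session has explored yet.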

In Conclusion

As always in software development, it is important to use the right tool for the right job. As part of a comprehensive test strategy, you should ideally have unit testing, functional testing and performance testing designed and built into your process. However, an important tool that is often overlooked is exploratory and free-form testing. In the past it was hard to measure, difficult to reproduce and poorly communicated. With SpiraTest and a session-based testing approach, you can apply a minimum amount of structure to free-form testing and get significant rewards. With session-based testing you write your tests as they are performed, you build up a library of experience and future testing ideas, you have the ability to do regression testing, and for the team leadership, you have clear objectives, metrics and results that can be evaluated.