Test Automation Strategy Document Template & Explanation: Download Our Free Template Today

With the advancements in AI and software QA, test automation is far more mainstream than it was even five years ago. However, implementing automated testing without a plan in place can waste resources, complicate workflows, and do more harm than good. This is where the test automation strategy document comes in. Keep reading to learn what it is, what it covers, and how you can create your own.

We’re not going to cover the basics of what test automation is in this article — for more background on this topic, see our article: What is Test Automation?

What is a Test Automation Strategy?

As the name suggests, a test automation strategy is a documented plan that defines how, when, where, and by whom test automation will be used in a software development lifecycle. It clarifies goals, scope, tools, processes, responsibilities, risks, metrics, and more. Essentially, a test automation strategy document encompasses everything your QA team needs to ensure that automating provides value rather than introducing unnecessary complexity.

Consequences of Not Using a Test Automation Strategy

Test automation strategies are becoming more and more critical as automated testing generally becomes more complex and influential. Teams that don’t implement a plan like this risk:

- Wasted Effort & Resources: Without a plan in place, teams may end up automating low-value or unstable tests that break often. Duplicated work can further lead to higher maintenance costs that waste resources.

- Poor Test Coverage: Without defined priorities, your test plan can have gaps and miss important functionalities or risk areas. In other words, testers might focus on “easy wins” instead of backend, integration, or error-edge flows.

- Slower Delivery: Automation that isn’t properly integrated into your delivery pipeline delays feedback and slows you down. Tests aren’t run frequently, and bugs slip into later stages where they become more complex (and expensive) to fix.

- Unstable Testing Suites: Without preparation before testing, poor design decisions may be made and unstable test data can be used, resulting in brittle or flaky tests. The outcome is frequent false positives/negatives that degrade trust in your results.

- Difficulty Scaling: Without a set plan to guide evolution, your automated tests will quickly become unwieldy to maintain. Old tests break, and you’ll need a strategy for modularity, versioning, environment differences, etc. to maintain efficiency.

- Inability to Measure ROI: Without clear goals, metrics, and tracking systems in place, it’s difficult to judge whether automation is helping or not. This could lead to expensive investment that doesn’t show improvements in quality, speed, or cost.

Key Components of an Effective Test Automation Strategy Document

The most important fields and elements you should include in a test automation strategy are:

| Test Automation Strategy Component | What the Component Refers to |
| --- | --- |
| Objectives and Goals | Specific outcomes automation should achieve, expressed with measurable targets |
| Scope | Boundaries of what to automate (which features, modules, environments, platforms are/aren’t included) |
| Test Types | Categories of tests to automate |
| Test Prioritization | Deciding which tests are most critical so they are automated first |
| Tools & Frameworks | Automation technologies, libraries, frameworks, and structures chosen to match your stack and needs |
| Test Data | How data is generated, managed, isolated, cleaned, and used in tests |
| Execution Strategy | When, where, and how automated tests are run |
| DevOps Integration | How automation ties into CI/CD, version control, reporting, and feedback loops |
| Risk & Limitations | What automation can’t/shouldn’t do, what might go wrong, and how to mitigate |
| Maintenance & Governance | Who owns and updates tests, how often reviews/refactoring occur, and management of flaky/obsolete tests |
| ROI Metrics | Measures to track investment vs. benefit (e.g. time saved, defects avoided, etc.) |

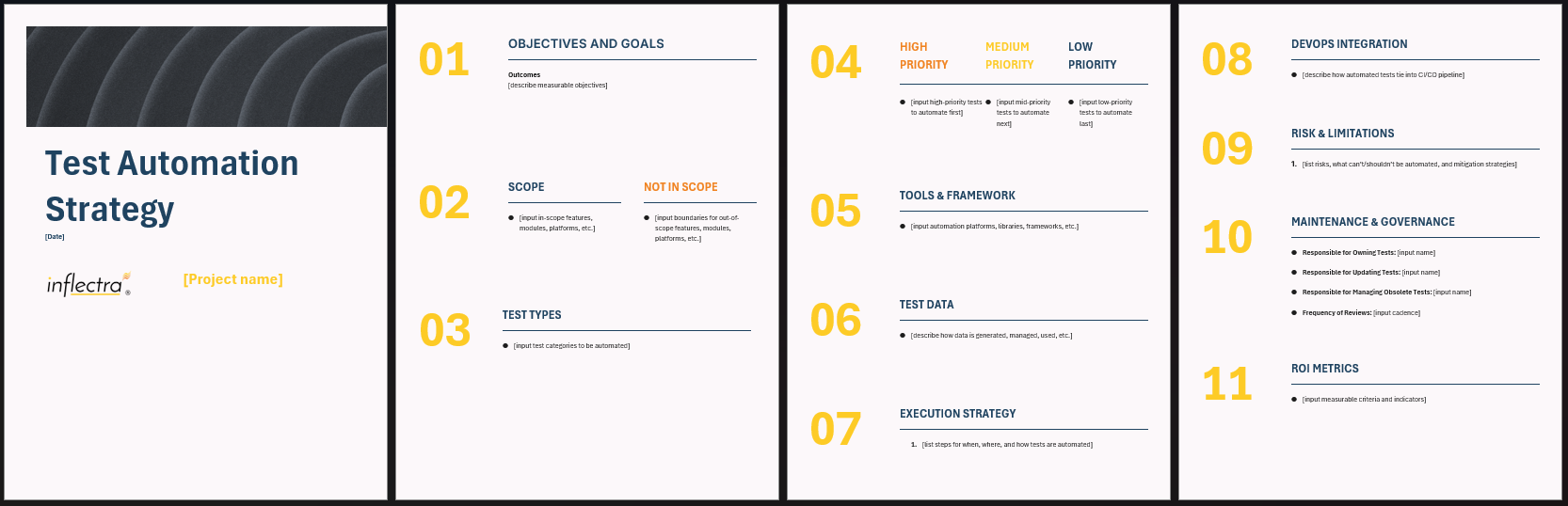

Test Automation Strategy Document Template

To help with all of this, we’ve created a free, pre-built test automation strategy template that you can download and use as a starting point for your next test automation strategy document.

Benefits of Using a Test Automation Strategy Template

Using a template instead of starting your test automation strategy document from scratch helps you avoid blank page syndrome and comes with several key benefits:

- Consistency & Completeness: Using a template forces the team to fill out all components, making it less likely (if not impossible) to forget key areas. Because the formatting is always the same, it’s also easier to carry the same consistency across projects.

- Kick-Off Speed: Starting from a template is faster (and easier) than starting from nothing, so you can get up and running sooner. This also helps teams who are new to automation start with a strong baseline and build from there.

- Shared Understanding: Using a template defines the same sections for goals, responsibilities, scope, and more. This aligns QA, devs, product teams, and ops so that all stakeholders’ expectations are on the same page.

- Re-Use & Learnings: Using a template allows you to refine the format over time, capturing lessons from past projects (e.g. what needed more clarity, what was most valuable) to avoid repeating or reinventing flaws.

- Enhanced Traceability: Using a template typically means designated sections for metrics, approvals, reviews, and ownership. This makes ongoing maintenance, governance, and auditing easier.

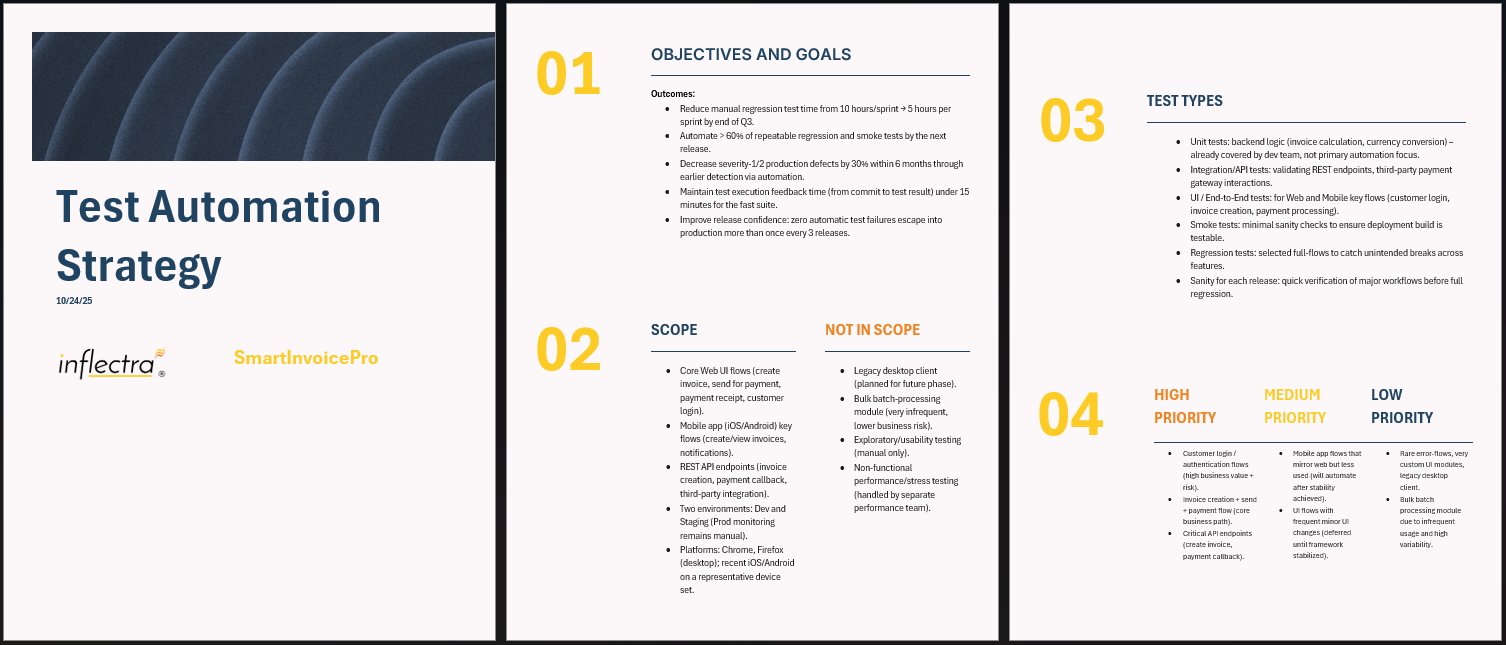

Test Automation Strategy Example

Using the template shown above, we’ve filled out each section with sample information so you can see what a completed (hypothetical) test automation strategy document could look like:

|

Test Automation Strategy Component |

Example Details |

|

Objectives and Goals |

Reduce regression testing time from 10h > 5h/sprint. Automate 60%+ of regression/smoke tests. Cut Sev-1/2 production defects by 30% in 6 months. Keep CI feedback <15 min per build. |

|

Scope |

Included: Core Web & Mobile invoice/payment flows, REST APIs, Dev & Staging envs. Excluded: Legacy desktop client, batch module, UX & performance testing. |

|

Test Types |

Unit (dev-owned), API/integration, UI/E2E (Web & Mobile), smoke, regression, sanity (pre-release) |

|

Test Prioritization |

High: Login, invoice creation/payment APIs. Medium: Mobile mirror flows. Low: Legacy or infrequent modules. |

|

Tools & Frameworks |

Rapise for automation (Web/Mobile/API), SpiraTest for test mgmt & traceability, Git for versioning, CI via Azure DevOps. |

|

Test Data |

Synthetic data via Rapise data-driven tests, seeded DB reset each run, masked prod-like data, isolated user/device profiles. |

|

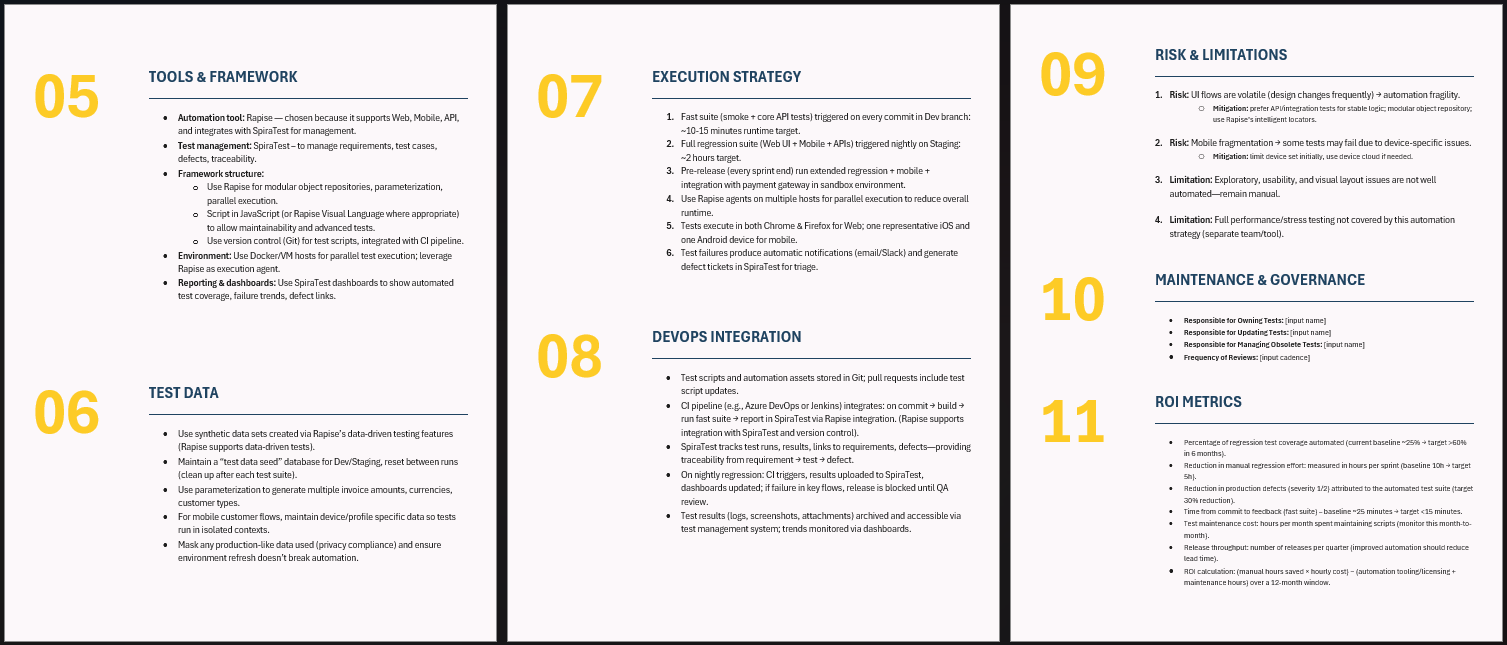

Execution Strategy |

Fast suite on every commit. Full regression nightly. Pre-release run per sprint. Parallelized via Rapise. |

|

DevOps Integration |

CI triggers tests > results to SpiraTest. Traceability from requirement > test > defect. Failures auto-create tickets. Dashboards show coverage & trends. |

|

Risk & Limitations |

UI volatility > test fragility. Device fragmentation > mobile variance. Exploratory & visual checks manual. |

|

ROI Metrics |

60% automated coverage target. 30% fewer high-severity bugs. Regression time cut 50%. CI feedback <15 min. Maintenance hours & release throughput tracked. |
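To make the ROI row more concrete, here is a minimal Python sketch of a break-even estimate. The 10h and 5h regression figures come from the example above; the 40-hour build cost and 1 hour of maintenance per sprint are purely hypothetical inputs, and the function name is our own.

```python
def break_even_sprints(build_hours, saved_per_sprint, maintenance_per_sprint):
    """Sprints needed before automation pays back its up-front cost.

    Returns None when maintenance eats all of the savings, meaning the
    investment never breaks even under these assumptions.
    """
    net_saving = saved_per_sprint - maintenance_per_sprint
    if net_saving <= 0:
        return None
    return build_hours / net_saving


# Regression time drops from 10h to 5h per sprint (from the example table).
saved = 10 - 5

# Hypothetical inputs: 40h to build the suite, 1h of upkeep per sprint.
sprints = break_even_sprints(build_hours=40, saved_per_sprint=saved,
                             maintenance_per_sprint=1)
print(f"Break-even after {sprints:.0f} sprints")
```

Tracking maintenance hours alongside time saved, as the example row suggests, is what lets you rerun a calculation like this with real numbers.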

How to Write a Test Automation Strategy Document in 10 Steps

Writing a test automation strategy document involves a straightforward workflow that follows these general steps:

1. Define Scope

Start by considering which parts of the product are in or out for automation — this includes platforms (mobile, web, API), environments, constraints, etc. Try to be as concrete as possible with this step by listing specific modules or components. Your team should consider non-functional aspects in this step as well.

2. Identify Areas for Automation

Start organizing test cases and test types by whether they are a good candidate for automation (e.g. regression, integration, unit testing) or not. Another factor that might contribute to decisions here is determining which areas are stable vs. volatile. This step may involve a test case audit that examines how frequently each test is executed, which ones have the highest failure rates when performed manually, etc. We recommend not automating one-off, unstable, or extremely UI-heavy tests that change every sprint.
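A test case audit like this can be sketched in a few lines of Python. The fields, threshold, and sample test cases below are hypothetical and only illustrate the idea: filter out one-off and volatile tests, then rank what remains by how often it runs and how often it fails when performed manually.

```python
from dataclasses import dataclass


@dataclass
class ManualTestCase:
    name: str
    runs_per_month: int          # how often the test is executed manually
    manual_failure_rate: float   # share of manual runs that fail (0.0 to 1.0)
    changes_every_sprint: bool   # True if the feature under test is volatile
    one_off: bool = False        # True if the test is not expected to repeat


def is_automation_candidate(case, min_runs_per_month=4):
    """Hypothetical rule of thumb: skip one-off, volatile, or rarely run tests."""
    if case.one_off or case.changes_every_sprint:
        return False
    return case.runs_per_month >= min_runs_per_month


audit = [
    ManualTestCase("login regression", 20, 0.10, False),
    ManualTestCase("redesigned dashboard layout", 12, 0.30, True),
    ManualTestCase("year-end data migration check", 1, 0.05, False, one_off=True),
    ManualTestCase("invoice API integration", 8, 0.30, False),
]

# Rank the remaining candidates by expected manual failures per month.
candidates = sorted(
    (c for c in audit if is_automation_candidate(c)),
    key=lambda c: c.runs_per_month * c.manual_failure_rate,
    reverse=True,
)
for c in candidates:
    print(c.name)
```

The exact weighting is a judgment call for your team; the point is that the audit produces an explicit, reviewable list rather than a gut feeling.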

3. Establish Risks & Limitations

Now we can start thinking through the key hazards that might disrupt test automation. This could include flaky tests, environment drift, tool costs, and resource constraints. This should also include what automation doesn’t cover, as well as technical limitations like expensive performance testing setups. For each of these areas, it’s valuable to include some sort of mitigation plan (going beyond simply identifying risks).

4. Verify & Validate Data

Before moving ahead with testing, consider where test data comes from and how it will be managed. With AI becoming a more significant part of software development, will you use any synthetic data? Whether your data is generated or real, it should be as close to production data as possible while still protecting privacy through masking and anonymization.
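As one illustration of masking, the Python sketch below replaces personal fields in a production-like record while leaving the business data intact. The field names, salt, and record are hypothetical. Note that a salted hash is pseudonymization rather than full anonymization, so treat this as a starting point, not a compliance guarantee.

```python
import hashlib


def mask_email(email: str, salt: str = "test-env-salt") -> str:
    """Swap a real address for a deterministic placeholder.

    The same input always maps to the same output, so records that shared
    an email before masking still match afterwards.
    """
    digest = hashlib.sha256((salt + email.lower()).encode("utf-8")).hexdigest()[:10]
    return f"user_{digest}@example.com"


def mask_record(record: dict) -> dict:
    """Return a copy of the record with personal fields masked."""
    masked = dict(record)
    masked["email"] = mask_email(record["email"])
    masked["name"] = "Test User"
    return masked


# Hypothetical production-like record.
original = {"name": "Jane Doe", "email": "jane.doe@corp.com", "invoice_total": 120.5}
masked = mask_record(original)
print(masked)
```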

5. Pick the Right Automated Testing Tools

Once you’ve established the scope, automation candidates, and risks, you can evaluate tools and frameworks that fit your needs. The tool you choose should be compatible with your tech stack and have plenty of integration capabilities to minimize workflow changes. Support for parallel runs, multi-platform testing, and ease of maintenance are all aspects of a strong test automation tool (like Rapise).

Learn more about the best automated testing tools here.

6. Prioritize Critical Tests

With all of the information we’ve gathered so far, start surfacing which tests would deliver the most value early on. We recommend taking a risk-based prioritization approach to highlight tests that are more likely to catch bugs or are essential to business flows. For example, this might heavily focus on sanity tests, high-traffic user flows, payment flows, and security features. It’s helpful to use a test pyramid, starting with lots of unit tests, fewer integration tests, and even fewer end-to-end tests.
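One common way to express risk-based prioritization is to score each test area as likelihood of failure multiplied by business impact. The Python sketch below assumes a 1 to 5 scale for both, and the areas and scores are hypothetical examples rather than recommendations.

```python
def risk_score(likelihood: int, impact: int) -> int:
    """Simple risk score: likelihood of failure times business impact (1-5 each)."""
    return likelihood * impact


# Hypothetical (likelihood, impact) ratings agreed on by the team.
test_areas = {
    "payment flow": (4, 5),
    "login": (3, 5),
    "profile avatar upload": (2, 2),
    "legacy report export": (1, 2),
}

# Highest-risk areas come first and are automated first.
ranked = sorted(test_areas, key=lambda name: risk_score(*test_areas[name]), reverse=True)
for name in ranked:
    print(name, risk_score(*test_areas[name]))
```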

7. Design Tests

From there, it’s time to start designing and writing tests. Before you start writing, we recommend aligning with the team on how each test will be written (style, framework, design patterns, etc.), how code should be structured, and how to run them. This is also the time to discuss how everyone can ensure that tests are reliable and can handle timeouts, asynchronous behavior, retries, and more. It can be useful to incorporate mocking and stubbing where appropriate.
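As a small illustration of handling asynchronous behavior, the Python sketch below polls a condition with a timeout instead of using a fixed sleep, and stubs an external dependency with the standard library’s `unittest.mock`. The `payment_gateway` object and its `status` method are hypothetical stand-ins for a real service.

```python
import time
from unittest import mock


def wait_until(condition, timeout=2.0, interval=0.05):
    """Poll `condition` until it returns a truthy value or `timeout` elapses.

    Returns True if the condition was met in time, otherwise False.
    """
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if condition():
            return True
        time.sleep(interval)
    return False


# Stub for a slow external service: "pending" twice, then "settled".
payment_gateway = mock.Mock()
payment_gateway.status.side_effect = ["pending", "pending", "settled"]

settled = wait_until(lambda: payment_gateway.status() == "settled")
print("settled:", settled, "after", payment_gateway.status.call_count, "checks")
```

Polling with a bounded timeout keeps the test fast when the system responds quickly and fails clearly when it does not, which tends to be less flaky than a hard-coded wait.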

8. Group & Categorize Tests

Next, we can group tests (most teams use tags for this process) by type, scheduled vs. triggered, speed, resource usage, and more. For example, very fast unit tests should run on every commit. However, heavier performance or full UI tests can probably run overnight. While it’s important to use multiple sets of categorization, make sure that this doesn’t get out of hand — tagging and metadata should be maintainable, not a spiderweb of unimportant information.
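The tagging idea can be sketched as a simple lookup in Python. The test names, tags, and trigger names below are hypothetical. In pytest, the same idea is usually expressed with markers and the `-m` option.

```python
# Which tags each trigger should run (hypothetical grouping).
SUITES = {
    "commit": {"unit", "smoke"},
    "nightly": {"regression", "ui", "performance"},
}

# Tags attached to each test (hypothetical test names).
TESTS = {
    "test_invoice_total": {"unit"},
    "test_login_smoke": {"smoke", "ui"},
    "test_full_checkout": {"ui", "regression"},
    "test_load_1000_users": {"performance"},
}


def select_tests(trigger: str) -> list:
    """Return the tests that share at least one tag with the trigger's suite."""
    wanted = SUITES[trigger]
    return sorted(name for name, tags in TESTS.items() if tags & wanted)


print("commit: ", select_tests("commit"))
print("nightly:", select_tests("nightly"))
```

Keeping the tag vocabulary this small is deliberate: a handful of well-understood tags is easier to maintain than dozens of overlapping ones.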

9. Outline Test Execution Schedule

Before executing your tests, it’s important to agree on a run schedule. Discuss when certain tests should be executed (on commit, pull request, nightly, pre-release, etc.) as well as who needs to trigger them. Which environments will they be run in, and what is the expected turnaround time? In addition to assigning responsibility for who triggers each test, is there a different person responsible if failure occurs? Lastly, define acceptable durations of each phase to get a rough timeline of all automated testing activities. From here, you’re ready to begin running tests.

10. Continuous Review & Feedback

As tests are running, you’ll need to monitor, review, and adjust strategy accordingly. Track flaky tests, failure rates, maintenance cost, tool sustainability, and more to inform decisions. This step should also include feedback loops from dev, QA, and product teams. From experience, we’ve found it easiest to set periodic checkpoints (such as after every release) to collect important metrics and review tool performance. The insights you uncover from these checkpoints will inform whether the original test automation strategy is still sound, or if it needs to be adjusted.
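Tracking flaky tests can start with something as simple as the Python sketch below. It assumes you can export run history as (test name, commit, passed) tuples, which is a hypothetical format, and it treats a test as flaky when it both passed and failed against the same commit.

```python
from collections import defaultdict


def find_flaky(runs):
    """Return tests that both passed and failed on the same commit."""
    outcomes = defaultdict(set)
    for name, commit, passed in runs:
        outcomes[(name, commit)].add(passed)
    return sorted({name for (name, _), seen in outcomes.items() if seen == {True, False}})


def failure_rate(runs, name):
    """Share of recorded runs of `name` that failed."""
    results = [passed for test, _, passed in runs if test == name]
    return results.count(False) / len(results)


# Hypothetical run history: (test name, commit, passed).
history = [
    ("test_login", "abc123", True),
    ("test_login", "abc123", False),
    ("test_login", "abc123", True),
    ("test_checkout", "abc123", False),
    ("test_checkout", "def456", True),
    ("test_search", "abc123", True),
]

print("flaky:", find_flaky(history))
print("test_login failure rate:", round(failure_rate(history, "test_login"), 2))
```

Here `test_checkout` is not flagged, because its failure and its pass happened on different commits, which looks more like a real defect that was fixed than an unstable test.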

Best Practices for Creating an Effective Test Automation Strategy Document

Whether you’re creating your test automation strategy from scratch or with a template, we recommend following these best practices to get the most value out of this document:

- Start Small: Don’t try to automate everything at once — begin your automation in one area, establish success, then incrementally expand. This will help validate your tool choices, identify pain points before they affect the entire stack, and gain buy-in from leadership.

- Use a Layered Approach: From our experience, heavy investment in unit and API/integration testing upfront provides more value faster than UI or end-to-end testing. Because of this, you may want to use a test pyramid and work your way up to end-to-end automation.

- Design for Maintainability: Using modular and reusable components, stable locators, and decoupled test data helps prevent brittle tests. Keep environment-specific settings in config files rather than embedding them (or environment-specific UI locators) in the tests themselves, as hard-coded values will create maintenance headaches down the road.

- Ensure Data Stability: It should go without saying, but environments and data both need to be stable and deterministic for effective testing. Ensure that your data is consistent, and reset or seed the data securely.

- Track & Monitor Test Health: It’s critical to track metrics and keep an eye on areas like false positive rate, average test execution time, pass/fail trends, etc. Dashboards like the ones provided by SpiraTest help you efficiently monitor the health of various tests from a single, intuitive pane of glass.

- Include Governance Review: Make sure to clearly assign responsibilities, like who writes tests, who reviews them, and who is in charge of maintaining them. Ambiguity in your plan can lead to poor accountability and finger-pointing.

- Be Realistic: Not everything can be automated, so be realistic about what tests are better for manual testing (e.g. usability testing, exploratory testing, etc.). Factor tool limitations and trade-offs into your decision and focus on automating what would benefit most from it.

- Adapt Over Time: Your team and processes need to remain Agile (even if you’re not using an Agile methodology). Stagnation in your strategies, team skills, and more can result in you falling behind competitors, so each test automation strategy document should evolve over time.

Enhance Your Test Automation Strategy with Inflectra’s Suite of QA Tools

However, templates can only take you so far. They’re usually generic in order to apply to many different industries and project types, and over-reliance on templates can lead to treating your core test automation strategy like a checklist instead of a true discussion. Both of these factors make it difficult to adapt as your product evolves, and a template alone may not account for emergent issues like unpredictable risks.

Our suite of QA software makes it easy to not only automate your testing, but also manage it more effectively. Leveraging AI-powered capabilities to speed up script creation means more time actually building your product, and quality engineered more efficiently into every release.

- Rapise provides codeless test automation (as well as manual testing) that you don’t need extensive coding knowledge to use. It also includes automated recording and intelligent playback, self-healing tests, natural language test definitions, and more so your testing is enhanced from every angle.

- SpiraTest (and SpiraTeam/SpiraPlan) offer industry-leading test management, requirements tracking, ongoing reporting, and traceability. Our Spira suite is the core of our QA offerings, with flexible pricing and scalability to fit your needs so you don’t pay for what you don’t need.

- Inflectra.ai is embedded into Rapise and Spira to extend their impressive capabilities even further with fully-compliant AI and ML models. This ranges from faster test case generation to scenario suggestions and even risk identification, providing a true copilot for development and QA.

The Inflectra platforms’ combination of AI, codeless features, broad platform support, unmatched traceability, and modularity makes them an incredibly powerful set of tools for any QA team. Still not convinced? Hear from our partners about what makes Inflectra an indispensable part of their SDLC or request a demo to see how we can support your test automation strategy today.